Revista de Etologia

ISSN 1517-2805

Rev. etol. vol.12 no.1-2 São Paulo dez. 2013

Are dogs sensitive to the human's visual perspective and signs of attention when using a keyboard with arbitrary symbols to communicate?

Carine Savalli (I); Briseida Dôgo de Resende (II); César Ades (II, 1)

(I) Departamento de Políticas Públicas e Saúde Coletiva - Universidade Federal de São Paulo (UNIFESP), Brasil. R. Silva Jardim, 136 - Vl. Mathias - CEP: 11015-020, Santos, SP, Brasil. +55-11-976116131. E-mail: carine.savalli@unifesp.br

(II) Departamento de Psicologia Experimental do Instituto de Psicologia da Universidade de São Paulo. Av. Prof. Mello Moraes 1721 - Cidade Universitária - CEP: 05508-030. São Paulo, Brasil. E-mail: briseida@usp.br

ABSTRACT

This study investigated whether dogs take into account the human's direction of attention and visual field when they communicate by means of a keyboard with lexigrams. To test this, two dogs, Sofia and Laila, were given the choice between two keyboards, one visible and the other not visible from the experimenter's perspective. In one experiment, different barriers were positioned so as to block the experimenter's view of one of the keyboards; in another experiment, we manipulated the human's signs of attention, such as body orientation and visibility of the eyes. Sofia and Laila consistently preferred to use the keyboard that was in the human's visual field. Laila's choice was also influenced by the human's body orientation. The results suggest that the subjects are sensitive to the human's visual access to the keyboard when they use it to ask for food.

Keywords: Dog–human communication; keyboard; attention; visual field.

RESUMO

Este estudo investigou se cães levam em conta a direção da atenção e o campo visual do ser humano quando se comunicam por meio de um teclado com lexigramas. Para tanto, duas cadelas, Sofia e Laila, foram submetidas a uma situação em que podiam escolher entre dois teclados, um deles visível e outro não-visível sob a perspectiva do experimentador. Em um experimento, diferentes barreiras foram posicionadas de tal forma a bloquear a visibilidade do experimentador a um dos teclados, e, em outro, experimento manipulamos os sinais de atenção do ser humano, como orientação corporal e visibilidade dos olhos. Sofia e Laila preferiram consistentemente usar o teclado que estava dentro do campo visual do ser humano. A escolha de Laila também foi influenciada pela orientação corporal do ser humano. Os resultados sugerem que as duas são sensíveis ao acesso visual do ser humano ao teclado quando elas o usam para pedir comida.

Palavras-chave: Comunicação cão-ser humano; teclado; atenção; campo visual.

Introduction

Dogs take part in cooperative activities that require skills to monitor gestures and signs of attention of a human partner (Hare & Tomasello, 1999; Hare et al., 2002; Miklósi, 2007; Virányi et al., 2006). These skills are probably more developed in sheepdogs and gundogs, which need to be in constant visual contact with their human handler while working (Gácsi et al., 2009), and can improve during ontogeny (Dorey, Udell, & Wynne, 2010; Riedel et al., 2008; Udell & Wynne, 2010; Wynne, Udell, & Lord, 2008). Even as pets, dogs depend on visual contact with humans to monitor their desires and reactions as well as to respond appropriately to their gestures and signs (Miklósi et al., 1998; Miklósi et al., 2003; Rossi & Ades, 2008). Their readiness to monitor human attention can even exceed their attention to conspecifics (Range et al., 2009a; Range et al., 2009b).

Several studies have shown that dogs are able to follow pointing gestures with the hand or arm as referential signs (Hare et al., 2002; Miklósi et al., 1998; Miklósi & Soproni, 2006). Although Agnetta, Hare & Tomasello (2000) found that, in a two-choice task, the human's gaze direction had no significant effect on the dog's response, more recent studies reached different conclusions and emphasized that dogs are able to follow the human's gaze (Téglás et al., 2012). Kaminsky, Pitsch & Tomasello (2012) additionally suggested that dogs take into account what humans can or cannot see. In their experiment, dogs took forbidden food when it was in the dark but not when it was illuminated; however, it did not matter whether the human observer was in the dark or not. This situation therefore does not assess whether dogs take into account the human's visual field in solving the task. Other research shows that body orientation, direction of the head and gaze are used as referential cues for objects and places, or as an indication of the human's attention to some region in the surroundings (Brauer, Call, & Tomasello, 2004; Call et al., 2003; Kaminsky et al., 2009; Soproni et al., 2001; Soproni et al., 2002; Virányi et al., 2004). These cues of attention can give information about the human's possible reactions to something that is in a certain place or happens there. Additionally, the position of the human in relation to other objects in the surroundings can reveal the region that is visible to him. Two conditions regarding the human's visual perspective on some target can be relevant: the first is body orientation (facing or with the back turned) and gaze direction (Call et al., 2003; Gácsi et al., 2004; Virányi et al., 2004); the second is the human's visual access to a target, since barriers or objects that block visual access can indicate that the target is out of his visual field (Brauer et al., 2004; Kaminsky et al., 2009). Gácsi et al. (2004) suggested that body orientation is more easily recognized as a cue of the human's visual attention than the visibility of the eyes. However, Miklósi (2007) suggested that, in front of a person whose eyes are covered by a blindfold, dogs usually react with some hesitation, which is evidence that, in some way, they pay attention to the human's face. There are also situations in which dogs prefer to avoid the human's gaze, which can also indicate that dogs are sensitive to the human's eyes (Soproni et al., 2001).

Brauer et al. (2004) showed that dogs try to eat forbidden food more frequently when there is a barrier blocking the experimenter's visual access to the food. They are influenced by the possibility of being seen both while eating the food and while merely approaching it. More recently, Brauer et al. (2012) found that dogs did not hide their approach to forbidden food when they could not see a human present, but did prefer a silent approach. Moreover, Kaminsky et al. (2009) showed that dogs are more prone to look for a toy that is within the human's visual access than for one that is behind an opaque barrier.

Monitoring attentional state and visual access is especially important for communication between dog and human. Visual communication is more effective if the sender and recipient are able to share their attention. Communication involves discriminating attentional states and sometimes requires behavior that calls the recipient's attention to the message before the communication itself (Gaunet, 2008; Miklósi et al., 2000). Attention monitoring has been observed in different contexts, such as communication between dogs and humans when requesting food (Gácsi et al., 2004; Virányi et al., 2004).

A question that arises from all these results is whether this ability to monitor the human's cues of attention and visual access is present in other communicative contexts, for example when a dog uses arbitrary visual signs to communicate with humans, as in the research by Rossi and Ades (2008). They studied a female mongrel, Sofia, who was trained to press keys with arbitrary signs (lexigrams) on a keyboard to communicate her desires (walk, petting, toy, water, food, crate). The keyboard, which emitted a sound (the name of the lexigram in the experimenter's voice) after a key was pressed, was usually within the potential visual field of the experimenter. Sofia used the keyboard socially, that is, only when there was a human nearby, which reinforced the communicative function of keyboard use. Later, another mongrel, Laila, who lived with Sofia, spontaneously acquired the use of some keys, probably by seeing Sofia use them, and then received less formal training.

Naturalistic observations showed that, before using the keyboard, Sofia and Laila usually gazed at the human partner. This gaze could be a way of monitoring the human's signs of attention and his visual access to the keyboard while they were using it. Communication with the keyboard was, in principle, visual, by means of lexigrams, and the dogs' monitoring behavior suggested that they could be sensitive to the human's visual attention when they used the keyboard, even though it emitted a sound after a key was pressed. The study described here was therefore planned to explore this hypothesis.

Two experimental conditions were designed to investigate whether the human's cues of attention were relevant for the dog in this context. Sofia and Laila could choose between two keyboards, and the visual attentional state of two assistants in relation to each keyboard was manipulated: firstly, with body orientation (facing or with the back turned), and secondly, with eye visibility (with or without a blindfold). We hypothesized that, if the dogs monitored attention while using a keyboard, they would choose the keyboard in front of the attentive person. Three further experimental conditions were designed to verify whether the human's visual access to the keyboard was relevant for this special form of communication. Sofia and Laila were tested in a situation in which they could choose to use either of two identical, equidistant keyboards to ask for more food, one visible to the experimenter and the other blocked from the experimenter's view by a barrier. If visual access was indeed relevant in this communicative context, Sofia and Laila would choose to communicate using the visible keyboard.

Methods

Subjects

Two female mongrel dogs participated in this research: Sofia, 7 years old and Laila, 2 years old. Sofia had already taken part in one study using the keyboard (Rossi & Ades, 2008) and in another study to check comprehension (Ramos & Ades, 2012). Sofia and Laila lived together with their owner, had access to the keyboard at home and used it routinely.

Materials

All experiments took place at the Dog Laboratory of the Institute of Psychology at the University of São Paulo, which has a 28 m² enclosed outdoor area.

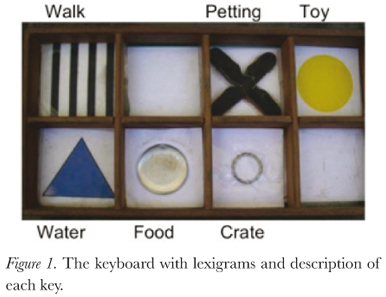

Two identical keyboards, each with eight 6 x 6 cm keys, were used (Rossi & Ades, 2008; Figure 1). Six of these keys were labeled with an arbitrary colored sign (lexigram) and corresponded to possible requests from the dogs (walk, petting, toy, water, food, crate; Figure 1). Our research did not focus on the appropriateness of lexigram use, which had already been examined by Rossi and Ades (2008); rather, it was designed to investigate the dog's perception of the human's visual attention to the keyboard. We selected food as the stimulus because the food key was the one with the highest rate of correct usage. Key pressing itself was therefore not analyzed. Since the vocal feedback emitted by the keyboard could confound the results regarding visual communication, both keyboards were used only in silent mode at the dogs' home for one month before the beginning of the experiments. This familiarization phase with the silent keyboards was intended to decrease the auditory influence on visual communication by means of the lexigrams.

All trials, including each use of the keyboard, were video-recorded with a Panasonic SDR-H200 camera.

Procedure

Experimental conditions designed to explore the influence of the human's cues of attention during keyboard use

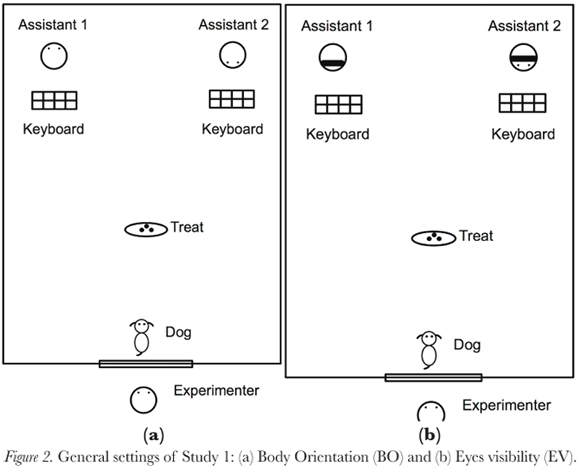

Body Orientation (BO): dogs could choose between two keyboards to ask for more food. Two research assistants, both familiar to the dogs, sat at the left and right sides of the laboratory, each with a keyboard in front of them on the floor. One of the assistants sat facing the dog and gazed at her continuously; the other sat with his/her back turned to the keyboard and therefore to the dog (Figure 2a).

Eye Visibility (EV): dogs could choose between two keyboards to ask for more food. Two research assistants, both familiar to the dogs, sat at the left and right sides of the laboratory, each with a keyboard in front of them on the floor. One of the assistants wore a blindfold covering her eyes; the other wore a blindfold on her forehead, so that her eyes were uncovered, and gazed constantly at the dog (Figure 2b).

The procedures for the BO and EV conditions were identical: a treat was placed on the floor in the middle of the room to stimulate the dog's desire for food (Figure 2). The main experimenter (C.S.) opened the laboratory door and held the dog for 5 seconds before setting her free. After eating the treat on the floor, the dog could choose one of the two keyboards to ask for more food. The trial ended when the dog chose one of the keyboards or when one minute had elapsed without a choice. The experimenter remained next to the door throughout, observing the dog's choice. Trials (n=16 in each condition) were run on a one-per-day basis (as explained in the general procedures below); keyboard and assistant positions (left/right) were randomly alternated across trials, as were the assistants' cues of attention (facing/back turned or blindfolded/not blindfolded, for the body orientation and eye visibility conditions respectively). The response was classified as a correct choice when the dog's first movement was toward the keyboard in front of the facing (BO condition) or non-blindfolded (EV condition) assistant. Dogs were rewarded for correct choices by the chosen assistant.

To prevent the dogs from developing a preference for one assistant over the other (both had been familiar to the dogs since the beginning of the study), the assistants were instructed not to interact with the dogs.

Experimental conditions designed to explore the influence of human's visual access to the keyboard on the dog's communication

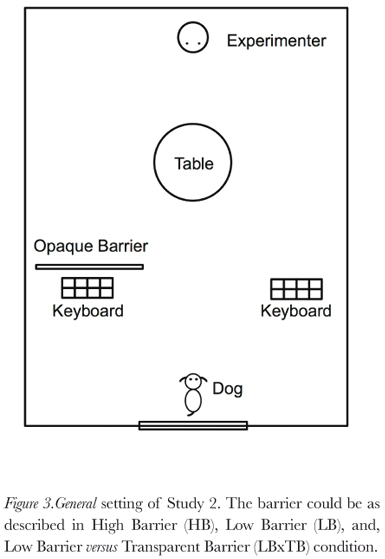

High Barrier (HB): the dogs could choose between two keyboards: one visible to the experimenter, the other placed behind a high opaque barrier (60 cm width x 100 cm height, made of wood) that blocked the experimenter's view of the keyboard and also suppressed visual contact between dog and experimenter during key pressing.

Low Barrier (LB): the dogs could choose between two keyboards: one visible to the experimenter, the other placed behind a low opaque barrier (60 cm width x 40 cm height, made of wood) that blocked the experimenter's view of the keyboard but allowed visual contact with the dog during key pressing.

Low Barrier versus Transparent Barrier (LBxTB): the dogs could choose between two keyboards: one visible to the experimenter, placed behind a transparent acrylic barrier (60 cm width x 40 cm height) whose edges were marked with a white strip to delimit it; the other placed behind the low opaque barrier (60 cm width x 40 cm height).

The procedures for the HB, LB and LBxTB conditions were identical: a table was placed in the middle of the room to discourage the dog from going directly to the experimenter without passing near a keyboard (Figure 3). For all three conditions, the experimenter led the dog to a position from which she could see both equidistant keyboards. After giving the dog a treat, the experimenter told her to "stay" and went to the other side of the laboratory, to a position also equidistant from the two keyboards (one of them behind an opaque barrier and not visible to the experimenter). Then she verbally encouraged the dog to ask for more food by saying "Sofia/Laila, do you want more food? Ask for it on the keyboard", a request the dogs were used to. The trial ended when the dog approached one of the keyboards or when one minute had elapsed without a choice. During the trial, the experimenter kept her gaze continuously on the dog to avoid giving any cue about which keyboard to choose, except for the few seconds when the dog chose the keyboard behind the high barrier, which interrupted eye contact. Trials (n=16 in each condition) were run on a one-per-day basis (as explained in the general procedures below), with keyboard positions (left/right) and barrier positions (left/right) randomly alternated across trials. For these three conditions, the response was classified as a correct choice when the dog's first movement was toward the keyboard visible to the experimenter. Dogs were rewarded for their correct choices by the experimenter.

A generalization phase for the barrier situation was run after all previous conditions in order to explore Sofia's choices in scenarios different from those used in the HB, LB and LBxTB conditions. Eleven additional trials were run with Sofia only, following the same procedure as in these three conditions but changing the settings: 1) removing the table; 2) inverting the settings in the room; 3) using a backpack as a barrier; 4) using a chair covered by opaque plastic as a barrier; 5) using a chair covered by a cloth as a barrier; 6) using a piece of cardboard as a barrier; 7) using a backpack as the opaque barrier versus the transparent barrier; 8) using a chair covered by opaque plastic as the opaque barrier versus the transparent barrier; 9) using a tree outside the laboratory as a barrier; and two more trials in which the only change was that the main experimenter was replaced by two different assistants familiar to the dog.

General procedures

The BO, EV, HB and LB conditions were conducted concurrently, one trial per condition per day, in random order. The LBxTB condition and the generalization phase for the barrier conditions were run only after the other conditions had been completed, and only with Sofia, because Laila had health problems and could no longer participate in the study. The 16 trials (16 days of data collection) were separated by intervals of one or two days. Running only one trial per day for each condition, and randomizing the sides of the keyboards, barriers, experimenters and the assistants' attentional states, was intended to minimize dependency between trials and to reduce the chance that incidental learning in one trial would interfere with subsequent trials.
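The randomization described above can be illustrated with a short sketch. The text does not say how the random orders were actually generated, so everything here is an assumption made for illustration: the function name `build_schedule`, the keyboard labels "A" and "B", and the use of independent coin flips for each side assignment.

```python
import random

# Conditions run concurrently, one trial per condition per day.
CONDITIONS = ["BO", "EV", "HB", "LB"]

def build_schedule(n_days=16, seed=None):
    """Hypothetical schedule: shuffle condition order within each day and
    draw the side assignments independently for every trial."""
    rng = random.Random(seed)
    schedule = []
    for day in range(1, n_days + 1):
        order = CONDITIONS[:]          # copy so the constant is not mutated
        rng.shuffle(order)             # random order of conditions within the day
        for condition in order:
            schedule.append({
                "day": day,
                "condition": condition,
                # Side of the attentive assistant (BO/EV) or unobstructed keyboard (HB/LB).
                "target_side": rng.choice(["left", "right"]),
                # Which of the two identical keyboards is placed on the left.
                "keyboard_on_left": rng.choice(["A", "B"]),
            })
    return schedule

if __name__ == "__main__":
    for trial in build_schedule(n_days=2, seed=1):
        print(trial)
```

Independent draws do not guarantee that the target appears equally often on each side; a balanced design would instead shuffle a fixed list of eight "left" and eight "right" entries per condition.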

Statistical analysis

For each experimental condition, a binomial test was applied to evaluate whether the dog's choices differed from chance (50%), assuming that the interval between trials was long enough to prevent one choice from influencing another. To test whether the dogs' choices were influenced by other factors, such as the side of the room (left/right) or which of the two identical keyboards was used, the experimental conditions were pooled and the normal (Gaussian) approximation to the binomial distribution was used. The significance level for all tests was 5%.
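As a minimal sketch of the per-condition test, the function below computes an exact two-sided binomial p-value against a chance level of 50%. The text does not state which software or which two-sided convention was used, so the "sum of outcomes no more likely than the observed one" rule and the function name `binomial_two_sided_p` are assumptions. The example uses the one count that can be read directly from the text: in the LB condition Sofia never chose the opaque barrier in 16 trials.

```python
from math import comb

def binomial_two_sided_p(successes, trials, p_null=0.5):
    """Exact two-sided binomial p-value: total probability, under the null,
    of all outcomes that are no more likely than the observed one."""
    pmf = [
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(trials + 1)
    ]
    observed = pmf[successes]
    # A small relative tolerance guards against floating-point ties.
    p_value = sum(prob for prob in pmf if prob <= observed * (1 + 1e-9))
    return min(1.0, p_value)

if __name__ == "__main__":
    # Sofia, LB condition: 16 visible-keyboard choices out of 16 trials.
    p_lb_sofia = binomial_two_sided_p(16, 16)
    print(f"p = {p_lb_sofia:.6f}, significant at 5%: {p_lb_sofia < 0.05}")
```

With a symmetric null of 50%, this rule gives the same result as doubling the one-sided tail probability.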

Results

In 6 trials, Sofia or Laila made no choice because she showed no interest in the food. These trials were therefore uninformative about the dogs' sensitivity to the human's cues of attention or visual perspective on the keyboard, and were excluded from the analysis.

Sofia took part in 80 trials (16 trials in each of the BO, EV, HB, LB and LBxTB conditions), and in only 2 of them, both in the HB condition, did she make no choice. When she did choose (78 trials), there was no preference for either side of the room (she chose the left side in 44.9% of all trials, p=0.365). Moreover, there was no preference for a specific keyboard: she chose one of them in 43.6% of trials (p=0.258).

Laila made no choice in 4 of her 64 trials (16 trials in each of the BO, EV, HB and LB conditions), one in each condition. When she did choose (60 trials), there was no preference for either side of the room (she chose the left side in 56.7% of all trials, p=0.312). Moreover, there was no preference for a specific keyboard: she chose one of them in 48.3% of trials (p=0.796).
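The side and keyboard checks rely on the normal approximation to the binomial, which can be sketched as follows. The raw counts are not given in the text, so the counts used here (35 of 78 for 44.9%, 34 of 78 for 43.6%) are inferred by rounding the reported percentages and should be read as assumptions, as should the absence of a continuity correction and the function name `normal_approx_two_sided_p`.

```python
from math import erfc, sqrt

def normal_approx_two_sided_p(successes, trials, p_null=0.5):
    """Two-sided p-value from the normal approximation to the binomial
    (no continuity correction)."""
    mean = trials * p_null
    sd = sqrt(trials * p_null * (1 - p_null))
    z = (successes - mean) / sd
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return erfc(abs(z) / sqrt(2))

if __name__ == "__main__":
    # Assumed counts, back-calculated from Sofia's reported percentages.
    print(f"left side: p = {normal_approx_two_sided_p(35, 78):.3f}")
    print(f"keyboard:  p = {normal_approx_two_sided_p(34, 78):.3f}")
```

Under these assumed counts the approximation gives values close to the p=0.365 and p=0.258 reported for Sofia.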

All results are presented in Table 1. In the BO condition, Laila consistently preferred to use the keyboard in front of the facing assistant (p=0.035), whereas Sofia's choices did not differ from chance (p=0.210). In the EV condition, neither Sofia's nor Laila's choices between the keyboards in front of the blindfolded and non-blindfolded assistants differed from chance (p=0.454 and p=0.302, respectively).

In the HB condition, both Sofia and Laila consistently chose the keyboard that was visible to the experimenter (p=0.002 and p=0.007, respectively). In the LB condition, both Sofia and Laila also chose the visible keyboard significantly more often than chance (p<0.001 and p=0.007, respectively). Moreover, in the LBxTB condition, Sofia preferentially chose the visible keyboard (p=0.004). For almost all variations in the settings proposed in the generalization phase for the barrier conditions, Sofia preferred the keyboard that was visible to the experimenter; this happened even in the most different setting, in which a tree was used as a barrier in a garden. In just one trial did Sofia choose the keyboard behind the barrier: one in which a different person took the place of the main experimenter.

It is important to point out that the first choice in the HB and LB conditions was the visible keyboard for both dogs, as was Sofia's first choice in LBxTB. Learning across the experiment is always a possibility, but we ran few repetitions per condition and only one trial per day in order to decrease this possible effect.

It is also important to note that in the LB condition Sofia did not choose the keyboard behind the opaque barrier in any trial. It is therefore not possible to argue that she learned to avoid this opaque barrier because of negative reinforcement, which supports the view that her preference for the transparent barrier in the LBxTB condition (run after the other conditions were finished) was not a learned avoidance of the low barrier from the LB condition.

Discussion

The current study explored the sensitivity of two dogs to the human's cues of attention and visual perspective when they use a keyboard with arbitrary signs to communicate about a desired food. Previous studies addressed a similar question in different contexts, such as forbidden actions, play or begging for food (Virányi et al., 2004; Gácsi et al., 2004; Call et al., 2003; Brauer et al., 2004; Kaminsky et al., 2009), and provided evidence of this ability in dogs, but none emphasized the dog as the producer of communicative behavior, which justified asking the question in the keyboard-using context.

The small number of subjects in the current study is explained by the fact that the use of the keyboard as a visual communicative tool involves a complex learning process. Moreover, the few repetitions for each condition were intended to decrease a possible learning effect during the experiment. Nevertheless, the knowledge gained from these case studies is valuable, since they show the potential for complex skills.

The results indicated that certain signs of human attention were not taken into account by the dogs while using the keyboard. Sofia's and Laila's choices between the keyboard in front of the person with eyes exposed (gazing at the dog) and the keyboard in front of the blindfolded person did not differ from chance. Sofia's choices between the keyboards in front of the facing assistant and the assistant with the back turned also did not differ from chance, although Laila, in this condition, showed a preference for the keyboard in front of the facing assistant.

The results for eye visibility deserve careful evaluation. Using the keyboard to communicate requires the dog to share her attention between two foci: (1) the keyboard itself, where the dog must choose one of six arbitrary symbols related to her own desire, which requires cognitive effort, and (2) the signs of attention of the receiver of the message. Eye visibility, a subtle cue, may therefore be less relevant for the dog to discriminate.

The choice of a blindfold to manipulate eye visibility, as in the current study and in Gácsi et al. (2004), should be discussed on the basis of what it could represent for the dog. A blindfold on the human's face can provoke curiosity or conflict in the dog, since it is not something the dog has experienced in her life with humans. Miklósi (2007) suggested that, in front of a person with a blindfold over the eyes, dogs usually react with some hesitation, an indication that the gaze is not being totally ignored. It is possible that this strangeness in the presence of a blindfolded person also masked a possible discrimination of attention cues.

The history of training in the use of the keyboard, mostly for Sofia, could also have interfered with the results in the BO and EV conditions. The original keyboard employed for this training had, as mentioned above, an electronic device that generated the sound of the experimenter's voice pronouncing the word corresponding to the lexigram every time a key was pressed. Although Rossi and Ades (2008) showed that Sofia visually discriminates the lexigrams and is perfectly capable of dispensing with the auditory feedback, it is possible that an association had been established between pressing a key, hearing the word pronounced, and the human then turning around in response, which could have reduced the control exerted by the human's visual signs of attention to the keyboard. Even though the dogs had been given a familiarization phase with the silent keyboards, the long previous routine of using the sound-emitting keyboard may have impaired their perception of the human's attention to the keyboard, and this may have generalized to the experimental context (always performed with the silent keyboard). In other words, it may be difficult to dissociate the visual and auditory features of the keyboard, which could have interfered with the results.

This interpretation raises the possibility that Sofia, Laila, and perhaps other dogs, recognize the value of acoustic signals (vocalization, for example) for drawing the attention of their human partner. Gaunet (2008), comparing guide dogs of blind people with pet dogs, prevented the dogs from reaching food that they had previously learned to locate. Guide dogs produced, more often than pet dogs, a new acoustic signal: they licked their mouths noisily, perhaps as a way of signaling to their blind owners, who had never responded to visual communication. It therefore seems that guide dogs can learn to use acoustic signals to call their owner's attention, a behavior probably reinforced during ontogeny. A similar process could have happened with the sound-emitting keyboard. More studies are necessary to compare the ability of dogs to perceive acoustic and visual signs, and to better understand the role of each modality of communication. For humans, vision is the most important perceptual ability, which is not true for dogs: they can rely on other perceptual cues, for example acoustic signals (Miklósi, 2007).

The most remarkable result of this research lies in the consistent choice by Sofia and Laila of the keyboard that was visible from the experimenter's point of view. Both Sofia and Laila preferred to use a keyboard that was in a region of potential visibility for the human. The barrier interrupted the visibility of the keyboard within the surroundings. This could explain why it was easier for Sofia and Laila to assess the potential visual field of the human than to pay attention to details of the human's face while using the keyboard. Their perception that the keyboard needed to be in the human's visual field is evidence that they behaved as if they needed to be seen using the keyboard in order to obtain a response to their request.

The difficulty with the barrier is that the dog must assess the relative position of the barrier and the human in relation to the keyboard: two elements of the environment must be taken into account. However, being in another room, or behind a physical barrier where the owner cannot see them, is a natural and commonplace situation in a dog's routine: dogs may be punished when they are seen and not punished when they are not seen, or rewarded when they are seen and not rewarded when they are not seen.

It is unlikely that the results could be ascribed to a "Clever Hans" effect or to a bias due to an asymmetry in the position or gaze of the experimenter. The experimenter was located at precisely the same distance from both keyboards and kept her eyes fixed on Sofia and Laila at all times, except when they chose the high barrier, which cut the visual contact between them. The results obtained in the three experimental conditions with barriers weaken alternative explanations and reinforce the interpretation that the visible/non-visible dimension guided the choices of the two dogs.

The first alternative explanation would be that Sofia and Laila chose the visible keyboard in the high barrier condition simply because the barrier could interrupt visual contact between the dog and the experimenter while the keyboard was being used. The low barrier eliminated this possible disruption of visual contact and, even so, did not change the preference for the side without a barrier. A second alternative explanation may be that the barrier would cause a small delay in Sofia's and Laila's departure toward the experimenter, or a small detour. Although the path toward the experimenter after pressing the keyboard was always very fast and direct, over the course of the experiments it occurred to us that the dog's locomotion could be slightly deflected by the presence of the barrier, or that she could be bothered by the barrier as a physical obstacle. The experiment performed with Sofia using a transparent barrier (with a visible edge) challenged this possibility. The consistency of Sofia's choice of the side with the transparent barrier in the LBxTB condition suggested that her behavior was controlled not by the distinction between barrier and no barrier, but by visibility itself. Finally, the generalization phase suggested that, with other kinds of barriers and settings, Sofia continued to prefer the option visible to the experimenter, which decreases the possibility that the choices in the HB, LB and LBxTB conditions were guided by an avoidance of those specific opaque barriers.

These results show that Sofia and Laila, when using a keyboard to communicate, are sensitive to the human's visual access to it, which is similar to what was found by Brauer et al. (2004) and Kaminsky et al. (2009). However, in those studies the dogs were not required to emit a communicative signal: they only had to perform a task. Sofia and Laila were able to learn to use a complex tool, the keyboard, to communicate their desires, and while using it they learned about the importance of the message recipient's visual access to the keyboard. We minimized the possibility of learning during the experimental trials by using few repetitions, leaving one- or two-day intervals between sessions, and randomizing the sides of the keyboards and barriers. The fact that Sofia, when exposed in the generalization phase to situations that differed from the test, almost always chose the keyboard visible to the experimenter emphasizes that she takes the experimenter's visual field into account and is not merely responding to a previously trained setting. On the other hand, even a reinforced situation may not be learned: correct responses in BO and EV were rewarded, but there was no discriminative learning.

The keyboard is a visual communicative tool, so it is expected that the recipient's cues of attention and visual perspective would influence Sofia and Laila's behavior. There is a good agreement between Sofia's and Laila's results, even when considering individual differences. Laila apparently proved to be a little more sensitive than Sofia to the signs of human attention.

Conclusion

The results observed with the barriers are very consistent and highlight important features of communication by means of arbitrary signs. Our results show that Sofia and Laila are able to take into account the experimenter's visual field when emitting a visual communicative signal.

Acknowledgments

Professor César Ades was the supervisor of this research and his contributions were essential. We thank Daniela Ramos for her careful reading of the manuscript and valuable suggestions, and Maria Maria M. Brandão, Thaís S. Domingues, and Maria Angélica Honório for their assistance in running sessions. This study complies with current Brazilian laws regarding the use of animals in research. The authors declare that they have no conflict of interest.

References

Agnetta, B., Hare, B., & Tomasello, M. (2000). Cues to food location that domestic dogs (Canis familiaris) of different ages do and do not use. Animal Cognition, 3, 107-112.

Brauer, J., Call, J., & Tomasello, M. (2004). Visual perspective taking in dogs (Canis familiaris) in the presence of a barrier. Applied Animal Behaviour Science, 88, 299-317.

Brauer, J., Keckelsen, M., Pitsch, A., Kaminsky, J., Call, J., & Tomasello, M. (2012). Domestic dogs conceal auditory but not visual information from others. Animal Cognition. DOI 10.1007/s10071-012-0576-9.

Call, J., Brauer, J., Kaminsky, J., & Tomasello, M. (2003). Domestic dogs (Canis familiaris) are sensitive to the attentional state of humans. Journal of Comparative Psychology, 117, 257-263.

Dorey, N. R., Udell, M. A. R., & Wynne, C. D. L. (2010). When do domestic dogs, Canis familiaris, start to understand human pointing? The role of ontogeny in the development of interspecies communication. Animal Behaviour, 79, 37-41.

Gácsi, M., Miklósi, A., Varga, O., Topál, J., & Csányi, V. (2004). Are readers of our face readers of our minds? Dogs (Canis familiaris) show situation-dependent recognition of human's attention. Animal Cognition, 7, 144-153.

Gácsi, M., McGreevy, P., Kara, E., & Miklósi, A. (2009). Effects of selection for cooperation and attention in dogs. Behavioral and Brain Functions, 5, 31.

Gaunet, F. (2008). How guide-dogs of blind owners and pet dogs of sighted owners (Canis familiaris) ask their owners for food? Animal Cognition, 11, 475-483.

Hare, B., & Tomasello, M. (1999). Domestic dogs use human and conspecific social cues to locate hidden food. Journal of Comparative Psychology, 113, 173-177.

Hare, B., Brown, M., Williamson, C., & Tomasello, M. (2002). The domestication of social cognition in dogs. Science, 298, 1634-1636.

Kaminsky, J., Brauer, J., Call, J., & Tomasello, M. (2009). Domestic dogs are sensitive to human's perspective. Behaviour, 146, 979-998.

Kaminsky, J., Pitsch, A., & Tomasello, M. (2012). Dogs steal in the dark. Animal Cognition. DOI 10.1007/s10071-012-0579-6.

Miklósi, A., Polgárdi, R., Topál, J., & Csányi, V. (1998). Use of experimenter-given cues in dogs. Animal Cognition, 1, 113-128.

Miklósi, A., Polgárdi, R., Topál, J., & Csányi, V. (2000). Intentional behaviour in dog-human communication: an experimental analysis of "showing" behaviour in the dog. Animal Cognition, 3, 159-166.

Miklósi, A., Kubinyi, E., Topál, J., Gácsi, M., Virányi, Z., & Csányi, V. (2003). A simple reason for a big difference: wolves do not look back at humans but dogs do. Current Biology, 13, 763-766.

Miklósi, A., & Soproni, K. (2006). A comparative analysis of animals' understanding of the human pointing gesture. Animal Cognition, 9, 81-93.

Miklósi, A. (2007). Dog behaviour, evolution and cognition. Oxford: Oxford University Press.

Ramos, D., & Ades, C. (2012). Two-item sentence comprehension by a dog (Canis familiaris). PLoS ONE, 7(2), e29689. doi:10.1371/journal.pone.0029689.

Range, F., Heucke, S. L., Gruber, C., Konz, A., Huber, L., & Viranyi, Z. (2009a). The effect of ostensive cues on dogs' performance in a manipulative social learning task. Applied Animal Behaviour Science, 120, 170-178.

Range, F., Horn, L., Bugnyar, T., Gajdon, G. K., & Huber, L. (2009b). Social attention in keas, dogs, and human children. Animal Cognition, 12, 181-192.

Riedel, J., Schumann, K., Kaminski, J., Call, J., & Tomasello, M. (2008). The early ontogeny of human-dog communication. Animal Behaviour, 75, 1003-1014.

Rossi, A. P., & Ades, C. (2008). A dog at the keyboard: using arbitrary signs to communicate requests. Animal Cognition, 11, 329-338.

Soproni, K., Miklósi, A., Topál, J., & Csányi, V. (2001). Comprehension of human communicative signs in pet dogs. Journal of Comparative Psychology, 115, 122-126.

Soproni, K., Miklósi, A., Topál, J., & Csányi, V. (2002). Dogs' (Canis familiaris) responsiveness to human pointing gestures. Journal of Comparative Psychology, 116, 27-34.

Téglás, E., Gergely, A., Kupán, K., Miklósi, Á., & Topál, J. (2012). Dogs' gaze following is tuned to human communicative signals. Current Biology, 22, 1-4.

Udell, M. A. R., & Wynne, C. D. L. (2010). Ontogeny and phylogeny: both are essential to human-sensitive behaviour in the genus Canis. Animal Behaviour, 79, e9-e14.

Virányi, Z., Topál, J., Gácsi, M., Miklósi, A., & Csányi, V. (2004). Dogs respond appropriately to cues of human's attentional focus. Behavioural Processes, 66, 161-172.

Virányi, Z., Topál, J., Miklósi, A., & Csányi, V. (2006). A non-verbal test of knowledge attribution: a comparative study on dogs and children. Animal Cognition, 9, 13-26.

Wynne, C. D. L., Udell, M. A. R., & Lord, K. A. (2008). Ontogeny's impacts on human-dog communication. Animal Behaviour, 76, e1-e4.

1 (IN MEMORIAM)