Avaliação Psicológica

ISSN 1677-0471 ISSN 2175-3431

Aval. psicol. vol.21 no.4 Campinas Oct./Dec. 2022

https://doi.org/10.15689/ap.2022.2104.24298.05

ARTICLES

Modeling Social Desirability in the Antisocial Self-Report (ASR-13) scores

Modeling Social Desirability in the Antisocial Behavior Self-Report Scale

Modeling Social Desirability in the Scores of the Brief Inventory of Antisocial Behaviors (ASR-13)

Nelson Hauck (I); Felipe Valentini (II)

(I) Universidade São Francisco - USF. https://orcid.org/0000-0003-0121-7079

(II) Universidade São Francisco - USF. https://orcid.org/0000-0002-0198-0958

ABSTRACT

The Antisocial Self-Report (ASR-13) was developed to assess a general antisocial personality factor. However, because antisocial traits are socially aversive, ASR-13 scores may be contaminated by social desirability in high-stakes testing situations. In the present study, we performed an in-depth analysis of the ASR-13 to determine which items may be subject to socially desirable responding when the instrument is used for data collection in prison settings. Participants were 204 college students, 120 community adults, and 20 male prisoners. A Multiple Indicators Multiple Causes (MIMIC) model suggested that three items were especially prone to eliciting socially desirable responding. We found evidence that prisoners likely attenuated their scores when rating items whose content is related to illegal behaviors. We discuss the implications of these findings and how they help explain the latent processes that cause item responses to the ASR-13.

Keywords: psychopathy; social desirability; response styles; faking; explanatory item response theory.

ABSTRACT (PORTUGUESE)

The Brief Inventory of Antisocial Behaviors was developed to assess a general antisocial personality factor. However, because antisocial traits are socially aversive, the inventory's scores may end up contaminated by social desirability in assessment contexts in which the individual has an interest in obtaining favorable results. In the present study, the ASR-13 was analyzed with respect to which items are susceptible to socially desirable responding when data are collected in prison settings. Participants were 204 university students, 120 community adults, and 20 incarcerated men. The Multiple Indicators Multiple Causes (MIMIC) model suggested that three items were especially prone to eliciting socially desirable responses. Evidence was found that incarcerated participants attenuated their scores on items with content related to illegal behaviors. The implications of the results are discussed, as well as how they help to understand the latent processes that cause responses to the ASR-13 items.

Keywords: psychopathy; social desirability; response styles; faking; explanatory item response theory.

ABSTRACT (SPANISH)

The Brief Inventory of Antisocial Behaviors was developed to assess a general antisocial personality factor. However, because antisocial traits are socially aversive, the inventory's scores may become contaminated by social desirability in assessment contexts in which the individual is interested in obtaining favorable results. In the present study, we analyzed the ASR-13 with respect to which items are susceptible to socially desirable responding when data are collected in prison settings. Participants were 204 university students, 120 community adults, and 20 incarcerated men. The Multiple Indicators Multiple Causes (MIMIC) model suggested that three items were especially prone to eliciting socially desirable responses. We found evidence that incarcerated participants lowered their scores on items with content related to illegal behaviors. We discuss the implications of the results and how they help to understand the latent processes that drive responses to the ASR-13 items.

Keywords: psychopathy; social desirability; response styles; faking; explanatory item response theory.

Antisocial Personality Disorder and psychopathy are syndromes that, despite their differences, share a core component that predisposes individuals toward a range of deviant and undesirable behaviors (Vaidyanathan et al., 2011). This broad antisocial trait can be described in terms of deceptiveness, antagonism, rule-breaking, and reckless or impulsive behavior, and it consists of a combination of low agreeableness and low conscientiousness (Decuyper et al., 2009). To assess this general antisocial factor, Hauck Filho, Salvador-Silva, and Teixeira (2014) developed a brief 13-item self-report inventory, the Antisocial Self-Report (ASR-13). Using data from undergraduate students, the authors found an excellent fit for a unidimensional model, with all items exhibiting large factor loadings (≥ .63), and high internal consistency (.92 according to both Cronbach's alpha and Guttman's lambda-2). One finding that merits attention is that the test information function for the 13 items revealed better coverage at extreme levels of the latent variable. This suggested that the instrument could be useful for assessing antisocial behavior not only among community (nonforensic) adults, but also in forensic and prison samples, where individuals tend to have more pronounced antisocial personality traits. The present study is an attempt to simultaneously test the feasibility of using the ASR-13 in prison settings and perform an in-depth analysis of the psychological processes that drive item responses to the instrument.
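
For readers who want to compute reliability coefficients of the kind reported above, the sketch below obtains Cronbach's alpha and Guttman's lambda-2 from a persons × items score matrix with NumPy. It is a minimal illustration, not the original authors' code; the simulated 13-item data are hypothetical.

```python
import numpy as np

def alpha_and_lambda2(scores: np.ndarray) -> tuple[float, float]:
    """Cronbach's alpha and Guttman's lambda-2 for a persons x items matrix."""
    cov = np.cov(scores, rowvar=False)        # k x k item covariance matrix
    k = cov.shape[0]
    total_var = cov.sum()                      # variance of the sum score
    item_var_sum = np.trace(cov)               # sum of the item variances
    lambda1 = 1.0 - item_var_sum / total_var
    alpha = k / (k - 1) * lambda1
    off_diag = cov - np.diag(np.diag(cov))     # keep only covariances (i != j)
    lambda2 = lambda1 + np.sqrt(k / (k - 1) * np.sum(off_diag ** 2)) / total_var
    return float(alpha), float(lambda2)

# Hypothetical data: 300 simulated respondents, 13 items on a 1-4 scale.
rng = np.random.default_rng(1)
theta = rng.normal(size=(300, 1))                       # one common factor
latent = 0.8 * theta + 0.6 * rng.normal(size=(300, 13))
items = np.digitize(latent, [0.0, 0.8, 1.6]) + 1        # thresholds -> 1..4
alpha, lambda2 = alpha_and_lambda2(items)
print(f"alpha = {alpha:.2f}, lambda-2 = {lambda2:.2f}")
```

Lambda-2 is a lower bound to reliability that is never smaller than alpha, so reporting both, as the original study did, shows how much the estimate depends on the choice of coefficient.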

Despite the merits of self-report questionnaires, their scores may suffer from a range of response biases, such as response styles and response sets (Primi et al., 2022). Response styles include the tendency of some respondents to paradoxically agree with both positively and negatively keyed items, such as "talkative" and "quiet" (acquiescence), or to use the extreme anchors of a Likert scale (extreme responding) (Ziegler, 2015). Response sets, in turn, include response distortions that occur, or increase, conditionally on the existence of incentives to perform well or poorly, such as impression management during a job interview. All these examples have something in common: test scores, which are expected to represent the test taker's level on a given trait, end up biased by secondary cognitive and/or emotional processes (Primi et al., 2022).
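
The two response styles named above can be quantified with simple person-level indices. The sketch below uses one common operationalization, assumed here rather than taken from the cited sources: extreme responding as the proportion of answers at either scale endpoint, and acquiescence as the mean raw (not reverse-scored) response to a balanced set of positively and negatively keyed items, centered on the scale midpoint. The 1-4 scale matches the ASR-13 format; the item pairs are hypothetical.

```python
import numpy as np

SCALE_MIN, SCALE_MAX = 1, 4  # 4-point Likert-type scale, as in the ASR-13

def extreme_response_rate(responses: np.ndarray) -> np.ndarray:
    """Per-person proportion of answers at either endpoint of the scale."""
    extreme = (responses == SCALE_MIN) | (responses == SCALE_MAX)
    return extreme.mean(axis=1)

def acquiescence_index(pos_keyed: np.ndarray, neg_keyed: np.ndarray) -> np.ndarray:
    """Per-person mean of raw answers to paired positively and negatively
    keyed items, minus the scale midpoint. Content cancels out across a
    balanced pair, so positive values indicate agreement regardless of content."""
    raw = np.hstack([pos_keyed, neg_keyed])
    return raw.mean(axis=1) - (SCALE_MIN + SCALE_MAX) / 2

# Hypothetical pair: "talkative" (positively keyed) and "quiet" (negatively keyed).
talkative = np.array([[4], [4]])
quiet = np.array([[4], [1]])       # person 1 agrees with both; person 2 is consistent
print(acquiescence_index(talkative, quiet))
print(extreme_response_rate(np.array([[1, 2, 3, 4], [2, 2, 3, 3]])))
```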

Unsystematic biases, such as social desirability, are less predictable than systematic biases, because their impact on item responses results from the interaction of features of items, persons, and testing situations. From the perspective of items, two types of content can be distinguished that are important for understanding why response distortions occur. While the primary intent of a self-report item is to capture information on a given latent trait of interest by including relevant descriptive content, item wording often also carries negative or positive valence, i.e., evaluative content that might repel or attract respondents (Bäckström et al., 2009). Whereas descriptive content concerns whether the item represents a low or a high trait level, evaluative content is connected to the use of trait descriptors that test respondents might see as "good" (desirable) or "bad" (undesirable) (Bäckström & Björklund, 2013; Peabody, 1967; Pettersson & Turkheimer, 2010b).

Even if some traits are, per se, more undesirable than desirable (or vice versa), some analytical techniques can help separate trait-related from evaluative variance. A classic example of separating descriptive from evaluative content was presented by Saucier et al. (2001). These authors noted that personality descriptors such as cautious and bold differ from one another descriptively because they lie at opposite poles of the same trait (fearlessness); the same holds for timid and rash. Nevertheless, cautious and bold are positively evaluated (they sound desirable), whereas timid and rash are negatively evaluated (they sound undesirable). Thus, both ends of a trait can often be represented by negatively and positively evaluated descriptors, although very often one trait pole coincides with desirable features and the other with undesirable behaviors, especially in the assessment of psychopathology (Pettersson et al., 2014). Including quadruplets such as the example above is an effective way to counterbalance evaluation and thereby minimize its influence on factor structure (Pettersson & Turkheimer, 2010a; Saucier et al., 2001). Another possibility is to write items that avoid both positive and negative evaluation (Bäckström et al., 2009, 2014; Bäckström & Björklund, 2013). Here, the rationale is to control for social desirability by removing evaluative content from the item statements.
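
The logic of the quadruplet can be made explicit by coding each descriptor on two contrasts: its pole on the fearlessness trait and the sign of its evaluation. The sketch below is an illustrative coding of the Saucier et al. (2001) example, not their procedure; it shows that the two contrasts are orthogonal, so evaluation sums to zero within each pole of the trait.

```python
# Illustrative coding of the cautious/bold/timid/rash quadruplet.
quadruplet = {
    # descriptor: (fearlessness pole, evaluation)
    "cautious": (-1, +1),
    "timid":    (-1, -1),
    "bold":     (+1, +1),
    "rash":     (+1, -1),
}

descriptive = [d for d, _ in quadruplet.values()]
evaluative = [e for _, e in quadruplet.values()]

# A zero cross-product means the descriptive and evaluative contrasts are
# orthogonal: knowing a descriptor's pole says nothing about its evaluation.
cross_product = sum(d * e for d, e in zip(descriptive, evaluative))

# Evaluation is counterbalanced within each pole of the trait.
evaluation_by_pole = {
    pole: sum(e for d, e in quadruplet.values() if d == pole)
    for pole in (-1, +1)
}
print(cross_product)        # 0
print(evaluation_by_pole)   # {-1: 0, 1: 0}
```

By contrast, a scale whose low pole used only desirable words and whose high pole used only undesirable ones would make the two contrasts identical, which is the situation described next for the ASR-13.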

However, these methods entail manipulations of item content that can only be implemented before data collection, i.e., during the item-writing step. Unfortunately, the development of the ASR-13 did not consider manipulating or minimizing the evaluative content of the item statements (Hauck Filho et al., 2014). Given the nature of the indicators included in the instrument, they are likely negatively evaluated. They not only describe unsocialized behaviors but are also worded so that, in some testing situations, they might repel respondents who would otherwise agree with the item's descriptive content. For instance, in item 9, "How often do you make threats to others to get what you want?", "making threats" is a very negative way of referring to insistent or aggressive persuasion. The same appears to hold for many (if not all) other items in the inventory. Hence, one implication is that the ASR-13 might not be appropriate for high-stakes situations, in which respondents typically try to make a "good" impression with their responses. Prison settings would be an extreme example, because prisoners could be especially interested in attenuating their self-reported antisocial behavior (e.g., when applying for parole). Even if anonymity is assured during data collection, these individuals may hold unfounded beliefs that responding sincerely could bring them further legal problems.

Therefore, in the present study, we performed an in-depth analysis of the ASR-13 with respect to which items are susceptible to socially desirable responding when the instrument is used for data collection in prison settings. As discussed above, controlling bias in instruments that have already been developed requires other methods (a priori methods such as quadruplets or evaluative neutralization are not applicable to the ASR-13). In this study, we approached the problem by combining experts' evaluations of social desirability (Peabody, 1967) with multidimensional modeling of descriptive and evaluative variance (Pettersson et al., 2012). More specifically, we collected social desirability ratings of the items from experts and used the average of the experts' evaluations as a proxy for the item loadings on a social desirability factor. We also included independent samples of prisoners and general population adults, as social desirability might be higher for the former than for the latter group. Our investigation focuses narrowly on the ASR-13, but also seeks to refine the assessment of antisocial traits with self-report inventories in high-stakes situations.

Method

Participants

Participants in the present study came from three independent samples. The first database included the 204 undergraduate students (mean age = 23.56 years; SD = 7.70; 60.6% female) from the study by Hauck Filho et al. (2014). Data were collected in groups during classes, using standard paper-and-pencil procedures. The second database comprised a heterogeneous community sample of 120 adults aged 18 to 56 years (mean age = 28.97 years; SD = 8.70; 60.83% male). Participants responded to the items on an online Google Forms questionnaire, with invitations to participate published on social networks. The third sample consisted of 20 male prisoners from an institution in southeastern Brazil. Data were collected individually, in a quiet room, under the supervision of staff from the institution. The total sample included in the analyses was N = 344. All participants signed an informed consent form before responding to the questionnaires; they were informed about the research purposes, the anonymity of the information provided, and the possibility of withdrawing from the study at any moment.

Instrument

The ASR-13 (Hauck Filho et al., 2014) is a self-report inventory originally developed to provide a brief assessment of the propensity toward antisocial behavior in research settings with community adult samples. Items tap features such as conning other people (e.g., "How often do you lie to cause a good impression about yourself?"), instrumental aggression (e.g., "How often do you hurt other people to get something you want a lot?"), and reactive aggression (e.g., "How often do you react aggressively to teasing?"). Each item is rated on a scale ranging from 1 = Never or almost never to 4 = Very often. Internal consistency (Cronbach's alpha) was .92 in the original dataset, .88 in the online community sample, and .90 in the prisoner sample.

Procedures

One focus of the present study was to inspect the evaluative content of the ASR-13 items. To do so, we asked 20 judges (graduate students in Psychology) to rate each item on a scale ranging from 1 = extremely undesirable to 9 = extremely desirable. Averaging ratings across judges provides an estimate of how desirable or undesirable the content of an item is (Bäckström et al., 2009; Bäckström & Björklund, 2013, 2016; Edwards, 1953). Item means close to 1 or 9 indicate the presence of evaluative content, which can elicit socially desirable responding from some individuals (Bäckström & Björklund, 2013). Hereafter, we call this information yielded by the judges' ratings the "desirability index." The results of this procedure are presented in Table 1. As expected, all items were rated by the judges as having strongly negative (undesirable) evaluative content. The mean desirability index was 2.07, very close to the lower bound of the scale. Because no special attention was given to item wording regarding social desirability in the study by Hauck Filho et al. (2014), the item content of the ASR-13 ended up pejorative, i.e., negatively evaluated.
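
Computing the desirability index amounts to averaging the judges' ratings item by item. The sketch below assumes a judges × items matrix on the 1-9 scale; the ratings shown are hypothetical and are not the values in Table 1.

```python
import numpy as np

def desirability_index(ratings: np.ndarray) -> np.ndarray:
    """Mean 1-9 desirability rating per item (rows = judges, columns = items)."""
    if ratings.min() < 1 or ratings.max() > 9:
        raise ValueError("ratings must lie on the 1-9 scale")
    return ratings.mean(axis=0)

# Hypothetical ratings from 4 judges for 3 items (not the study's data).
ratings = np.array([
    [1, 3, 5],
    [2, 2, 6],
    [1, 4, 4],
    [2, 3, 5],
])
index = desirability_index(ratings)
print(index.tolist())   # [1.5, 3.0, 5.0]
```

In this toy example, the first item (1.5) would count as strongly undesirable, whereas the third (5.0) sits at the evaluatively neutral midpoint.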

Data analysis

We were interested in several aspects of the potential effect of socially undesirable content on item endorsement, especially in the prisoner sample. In a preliminary step, we compared scores on the instrument across sample groups using ANOVA, and then used linear regression to explore the extent to which item endorsement was guided by social desirability. The ANOVA is included here because it is a simpler way of analyzing our data and it helps convey the rationale behind the more complex analyses performed subsequently, e.g., the MIMIC model.

Next, we relied on structural equation modeling (SEM) of the combined data from the three samples to gain a deeper understanding of the latent processes driving item responses. Because in this step we were interested in comparing prisoners versus non-prisoners, students and community adults were aggregated into a single non-prisoner sample. As seen in Figure 1, we tested a series of four models of increasing complexity: 1) mean differences between sample groups (Model 1), 2) Multiple Indicators Multiple Causes (MIMIC; Model 2), 3) simple mediation (Model 3), and 4) mediated bifactor (Model 4). Each model tested a distinct causal hypothesis concerning the data at hand and required specific constraints to be identified, as described below.

Model 1 represents a simple mean-difference hypothesis: Sample group (prisoner versus non-prisoner) explains mean differences on the latent factor (F), which, in turn, accounts for the variance shared by the indicators. This model is naïve in the sense that it assumes that all differences in item endorsement between the samples arise from true mean differences on the latent factor assessed by the ASR-13. Its advantage over the ANOVA comparison is that it controls for measurement error, which might attenuate (or inflate) group differences.

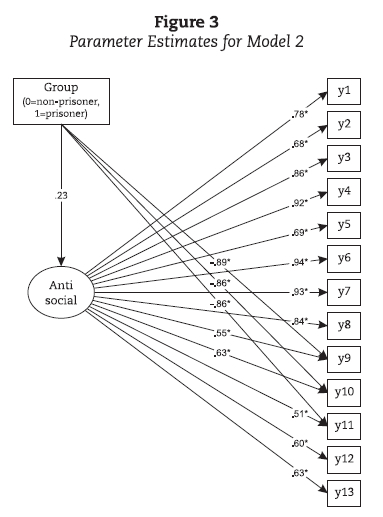

Model 2 represents a differential item functioning (DIF) hypothesis: Besides the effect hypothesized in Model 1, Model 2 tests for DIF, i.e., whether prisoners and non-prisoners respond differently to items irrespective of their latent scores (in Figure 1, item y3 functions differentially according to sample group). Based on an assessment of item content, we theorized that items implying the commission of potentially illegal acts would be more susceptible to DIF in a prison setting. Our main candidates were items 9 (Make threats to others to get what you want?), 10 (Use false names to avoid being caught for something you did or plan to do?), and 11 (Vandalize?). Because estimating direct paths from the sample variable to both the factor and all 13 items would leave the model unidentified, the path from sample group to the antisocial latent factor was constrained to 1 in Models 2 and 3 (this parameter is subsequently estimated during the iteration steps). We tested the significance of direct paths from sample group to items 9, 10, and 11, and inspected modification indices for other potential direct paths.
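
The masking effect that motivates Model 2 can be illustrated by simulating data from a MIMIC model with uniform DIF. The sketch below is a hypothetical data-generating process, not the fitted Mplus model: group 1 has a higher latent mean, yet negative direct effects from group to items 9, 10, and 11 shrink the observed sum-score difference. Loadings, thresholds, and effect sizes are assumptions chosen for illustration.

```python
import numpy as np

def simulate_mimic(n_per_group=2000, gamma=0.5, dif=-1.0, seed=0):
    """Simulate 13 ordinal items from a MIMIC model with uniform DIF.

    Group 1 (a hypothetical 'prisoner' group) has a latent mean that is
    higher by `gamma`, but its latent responses to items 9, 10, and 11 are
    also shifted by `dif`, independently of the trait. All parameter
    values are hypothetical, not estimates from the study.
    """
    rng = np.random.default_rng(seed)
    group = np.repeat([0, 1], n_per_group)
    theta = gamma * group + rng.normal(size=group.size)   # latent antisocial trait
    loadings = np.full(13, 0.8)
    direct = np.zeros(13)
    direct[[8, 9, 10]] = dif                  # items 9-11 (0-based indices 8-10)
    y_star = (np.outer(theta, loadings) + np.outer(group, direct)
              + rng.normal(scale=0.6, size=(group.size, 13)))
    items = np.digitize(y_star, [0.0, 0.8, 1.6]) + 1    # 1-4 ratings
    return group, items

def sum_score_gap(group, items):
    """Observed sum-score mean of group 1 minus that of group 0."""
    total = items.sum(axis=1)
    return total[group == 1].mean() - total[group == 0].mean()

for dif in (0.0, -1.0):
    gap = sum_score_gap(*simulate_mimic(dif=dif))
    print(f"direct effect {dif:+.1f}: sum-score gap = {gap:.2f}")
```

Fitting a MIMIC model to such data would estimate the direct effects on items 9 to 11 separately from the group effect on the latent factor, whereas the sum score alone cannot distinguish the two.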

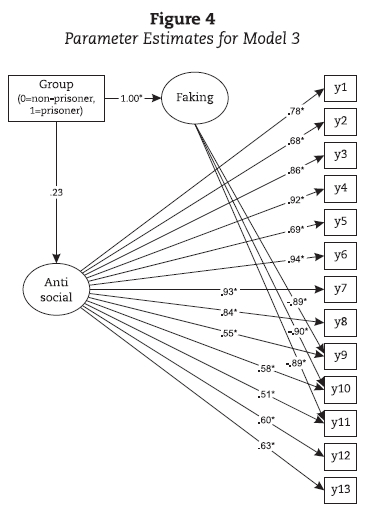

Model 3 hypothesizes about the cause of the DIF tested in Model 2. The main idea is that the testing situation under which prisoners responded to the items might have activated a faking tendency that suppressed item scores. A faking factor ("FK" in Figure 1) is therefore theorized to act as a mediator, carrying the effect from sample group to the items. This mediated effect represents the interplay of situation × person × item features in explaining socially desirable responding (Bäckström & Björklund, 2013; Ziegler & Buehner, 2015). Once again, the key indicators of this faking factor were hypothesized to be items 9, 10, and 11. Modification indices were also inspected for additional indicators. We constrained the faking factor to be orthogonal to the antisocial factor for the sake of model identification, but also because we hypothesized that, in a prison setting, faking would affect those three items relatively independently of an individual's antisocial level.

Model 4 is a more complex version of Model 3 that additionally controls for evaluative variance in the 13 items. This is done to better recover both the true loadings of the items on the antisocial factor and the regression parameter from group to the antisocial factor. Loadings on the evaluative factor ("Va" in Figure 1) were fixed to proportional weights derived from the desirability index described in Table 1. The desirability index d of each item was rescaled as (d − 5)/4, i.e., by subtracting 5 and then dividing by 4. This procedure converts the original values to a scale from −1 to 1 (the same metric as factor loadings), where values close to −1 indicate extremely undesirable content and values close to 1 indicate extremely desirable content. The fixed loadings for items 1 to 13 on the evaluative factor were: −.84, −.53, −.77, −.88, −.51, −.96, −.88, −.70, −.68, −.83, −.89, −.49, and −.53. Our strategy simplifies the procedures implemented by other researchers in similar circumstances (Peabody, 1967; Pettersson et al., 2014). Fit indices are crucial for judging whether these theoretically derived loadings indeed fit the data. Model 4 also tests the direct path from group to the evaluative factor.
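
The rescaling of the desirability index d into a fixed loading, λ = (d − 5)/4, and its inverse, d = 5 + 4λ, can be checked against the loadings listed above. The sketch below recovers the desirability indices implied by those two-decimal loadings; their mean (about 2.08) agrees with the reported mean desirability index of 2.07 up to rounding.

```python
# Fixed loadings on the evaluative factor in Model 4, as reported above.
EVALUATIVE_LOADINGS = [-.84, -.53, -.77, -.88, -.51, -.96, -.88,
                       -.70, -.68, -.83, -.89, -.49, -.53]

def to_loading(desirability: float) -> float:
    """Map a 1-9 desirability index onto the -1..1 loading metric."""
    return (desirability - 5) / 4

def to_desirability(loading: float) -> float:
    """Inverse mapping: recover the 1-9 desirability index from a loading."""
    return 5 + 4 * loading

implied = [to_desirability(lam) for lam in EVALUATIVE_LOADINGS]
implied_mean = sum(implied) / len(implied)
print(round(implied_mean, 2))   # 2.08
```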

Given the ordered categorical nature of the Likert-type indicators, we estimated each model's parameters from the polychoric correlation matrix of the items using a robust least squares estimator (weighted least squares mean- and variance-adjusted, WLSMV). Goodness of fit was inspected using the chi-square test, the Comparative Fit Index (CFI; should be greater than .95), the Tucker-Lewis Index (TLI; should be greater than .95), and the Root Mean Square Error of Approximation (RMSEA; should be smaller than .08). Analyses were conducted using R and Mplus 7.11 (Muthén & Muthén, 2017).
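
The approximate fit indices listed above can be computed from the chi-square statistics of the model and of the baseline (independence) model. The sketch below uses the standard textbook formulas; it is an assumption that they reproduce Mplus output exactly, because with WLSMV the indices are based on adjusted chi-square values and programs differ in details such as using N or N − 1 in the RMSEA. The numbers in the usage lines are hypothetical, not the values in Table 2.

```python
import math

def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index of model m against baseline model b."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b

def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)

def acceptable(cfi_value: float, tli_value: float, rmsea_value: float) -> bool:
    """Cutoffs used in this study: CFI and TLI > .95, RMSEA < .08."""
    return cfi_value > .95 and tli_value > .95 and rmsea_value < .08

# Hypothetical statistics for 13 items (baseline df = 13 * 12 / 2 = 78).
chi2_m, df_m, chi2_b, df_b, n = 150.0, 65, 3000.0, 78, 344
fit = (cfi(chi2_m, df_m, chi2_b, df_b), tli(chi2_m, df_m, chi2_b, df_b),
       rmsea(chi2_m, df_m, n))
print("CFI = %.3f, TLI = %.3f, RMSEA = %.3f" % fit, acceptable(*fit))
```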

Results

We compared the sum scores of the 13 ASR-13 items across samples. Mean scores for university students, community adults, and prisoners were 18.42 (SD = 6.54), 15.38 (SD = 2.05), and 15.45 (SD = 4.66), respectively. The ANOVA indicated a significant mean difference between samples, F(2, 341) = 13.47, p < .001, and Tukey's post hoc test revealed that students scored significantly higher than community adults (p < .001), a finding that is not surprising given that young people tend to be more antisocial than older adults (Moffitt, 1993). However, prisoners had significantly lower means than university students (p = .046) and did not differ significantly from community adults (p = .999). When students and community adults were aggregated, non-prisoners had a non-significantly higher mean than prisoners, p = .146, d = .36. These results are unexpected and difficult to trust, as they imply that prisoners are less antisocial than typical university students and adults from the general population. One possible explanation is that, for some reason, prisoners attenuated their scores when rating items, which masked true mean differences between sample groups. We turn to this possibility next.
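
As a check on the ANOVA, the F statistic can be recomputed from the reported group sizes, means, and standard deviations. The sketch below implements the one-way between-groups decomposition in plain Python; because it works from rounded summary statistics rather than raw data, the result (about 13.5) is close to, but need not exactly equal, the reported F(2, 341) = 13.47.

```python
def one_way_anova_from_summary(ns, means, sds):
    """Return (F, df_between, df_within) from group sizes, means, and SDs."""
    k, n_total = len(ns), sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    ss_within = sum((n - 1) * s ** 2 for n, s in zip(ns, sds))
    df_b, df_w = k - 1, n_total - k
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w

# Students, community adults, prisoners (values reported above).
f, df_b, df_w = one_way_anova_from_summary(
    ns=[204, 120, 20], means=[18.42, 15.38, 15.45], sds=[6.54, 2.05, 4.66])
print(f"F({df_b}, {df_w}) = {f:.2f}")
```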

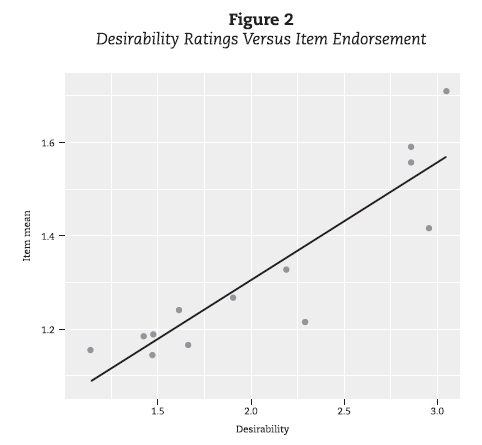

If evaluative content is a cause of item responses, then we should find a linear relationship between item desirability and item endorsement. To test this hypothesis, we calculated the empirical mean of each item in the aggregated sample and regressed it on the desirability index from Table 1. Desirability accounted for 81.5% of the variance in item means, F(1, 11) = 48.47, p < .001, β = .90. The linear relationship between these variables is depicted in Figure 2. This strong association indicates that the lower an item's desirability, the lower its endorsement. As suspected, evaluative content played a salient role in explaining responses to the ASR-13 items.
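
The regression statistics reported above are internally consistent. With one predictor and n = 13 items, F(1, n − 2) = [R²/(1 − R²)](n − 2), and the standardized slope equals the correlation, √R². The short sketch below applies these identities to R² = .815; it gives F ≈ 48.46 and β ≈ .90, matching the reported values up to the rounding of R².

```python
import math

def simple_regression_f(r_squared: float, n: int) -> float:
    """F statistic with (1, n - 2) df for a one-predictor regression."""
    return r_squared / (1 - r_squared) * (n - 2)

r2 = 0.815        # desirability index -> item means, as reported
n_items = 13
print(round(simple_regression_f(r2, n_items), 2))   # 48.46
print(round(math.sqrt(r2), 2))                       # 0.9
```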

We then used SEM to better understand the processes underlying item responses. Results are shown in Table 2. First, we tested Model 1, the naïve hypothesis that prisoners differ from non-prisoners in how antisocial they are, but that both samples behave the same way when rating items, i.e., social desirability does not operate more strongly in one sample than in the other. This model fit the data poorly. Replicating the untrustworthy pattern from the simple mean comparison, the analysis indicated that prisoners had a lower antisocial latent mean than non-prisoners (standardized regression coefficient of the latent factor on sample = −.53, p < .001).

We then tested Model 2, which accounts for both true differences and DIF across samples. Despite the large chi-square, this model yielded a reasonable approximate fit to the data and, strikingly, the regression coefficient from group to the antisocial factor was estimated at .23. Thus, once DIF was controlled, the relation between group and the antisocial factor switched from negative to positive. This result indicates that controlling for DIF partially removed the bias in the comparison of latent means, now pointing, as expected, to prisoners as more antisocial than non-prisoners. As hypothesized, items 9, 10, and 11 showed large DIF, as indicated by the size of the direct effects from group to these items. Parameter estimates for Model 2 are presented in Figure 3. Results for Model 3 (Figure 4) were identical to those for Model 2, showing that the additional latent factor can capture the effect of group membership through faking. In Model 4 (Figure 5), the additional factor captures the general evaluative content of the items. The direct path from group to the evaluative factor was significant (.21, p < .01), but this effect was weaker than the specific effects on items 9, 10, and 11. Model 4 thus provides evidence that prisoners showed markedly more socially desirable responding only for specific items.

Discussion and conclusion

The present study investigated the evaluative content of the ASR-13 items and analyzed the role played by context in explaining item responses when the instrument is used for research purposes in prison settings. As expected, all items were rated as negatively valenced. Although at first glance this result seems to undermine the validity of self-report data gathered with the ASR-13, it closely resembles the findings of Edwards (1953), who reported a correlation of r = .87 between the judged desirability and the endorsement of typical personality items by university students. A series of other studies have also found that people in general are highly reactive to content valence in personality inventory items (Bäckström & Björklund, 2013; Borkenau & Ostendorf, 1989; Saucier et al., 2001). Having neutral items, or items balanced for evaluation, would be an advantage over the current version of the ASR-13, but this alone does not preclude the use of the tool for research purposes.

SEM indicated three items that were predominantly predicted by sample type rather than by the latent factor that explained most of the variance in the remaining items. Surprisingly, these items (9, 10, and 11) were not the ones rated by the judges as portraying the least desirable traits (see Table 1). Instead, the items with DIF describe behaviors that could imply having committed illicit violations of social norms. One interpretation is that prisoners adopted a very particular defensive strategy when responding to items, partially guided by evaluative content but mostly guided by whether endorsing an item would amount to confessing involvement in past or current (perhaps still undetected) illicit activities.

This finding argues against the simplistic explanation that prisoners in the present study were more sensitive than students to item social desirability. Prisoners likely attenuated their scores when rating items, but faking was larger for items whose content relates to behaviors that could lead an individual to be imprisoned, irrespective of social desirability. In brief, we found evidence that 1) prisoners were only slightly more sensitive to social desirability than students and community adults under anonymous testing conditions, but 2) prisoners attended to whether rating specific items could reveal the commission of illegal acts. The unreliable mean differences we found were likely the result of these combined biases. One should not discard the possibility that prisoners in the present sample, despite agreeing to the informed consent, remained suspicious about the nature of the study and the consequences of answering the research questionnaire honestly. Nevertheless, even if this interpretation is correct, the DIF effect should not be uncritically generalized to other prison samples, as it could instead reflect a situation specific to the institution where the data were collected. Further research is necessary to establish whether the effect replicates with data from other institutions. Our recommendation is that, until more evidence is available, researchers should be skeptical about using items 9, 10, and 11 in (correlational or mean comparison) analyses of data collected from prisoners. Because the present study found large DIF for these items, it is premature to assume that they function equivalently across non-forensic and forensic samples.

Besides reporting a psychometric investigation of the ASR-13, the present study sheds light on the complexity of the response processes that underlie item responses. Traditional measurement models such as item factor analysis (Wirth & Edwards, 2007) and item response theory (Embretson & Reise, 2000) assume that indicators are independent conditional on the latent variable. These techniques are undeniably invaluable tools, responsible for many advances in psychological theory and testing. However, from a realist perspective, the modeling of psychological data should take into account all relevant item, person, and testing influences that play a role in the processes that generate item responses. This entails thinking beyond the standard factor-indicator relationship to better understand what happens when respondents take a test. Bornstein's (2011) process-focused account of test validity draws researchers' attention to the fact that not only might the cognitive processes vary according to the nature of the indicators included in a test, but many contextual variables also affect item responses, including assessment setting, instructional sets, affective states, and examiner behavior. Only by attending to all these influences on test scores can Psychology fully derive a true theory of item responses.

The present report also has some limitations. The most important is the small size of the prisoner sample used in the DIF analysis with the MIMIC model, although MIMIC modeling is robust to unbalanced samples (Jamali et al., 2017). Furthermore, the DIF effects found here cannot be generalized to other prison samples until more studies have replicated them. We encourage other researchers to report similar analyses with their own data, which would help establish the boundaries of reasonable use of the ASR-13. Another shortcoming is that we could not include other external variables, such as sex, in the MIMIC model because all prisoners were men. Such an analysis could indicate whether modeling the separate components of item responses can indeed yield more reliable correlations with external criteria.

Acknowledgments

None.

Funding

This research received no external funding; it was funded by the authors' own resources.

Authors' contributions

We declare that all authors participated in the preparation of the manuscript. Specifically, Nelson Hauck developed the project, collected the data, and participated in the writing and data analysis. Felipe Valentini participated in the writing and data analysis.

Availability of data and materials

All data and syntax generated and analyzed during this research will be treated with complete confidentiality, as required by the Ethics Committee for Research in Human Beings. However, the dataset and syntax that support the conclusions of this article are available upon reasonable request to the principal author of the study.

Competing interests

The authors declare that there are no conflicts of interest.

References

Bäckström, M., & Björklund, F. (2013). Social desirability in personality inventories: symptoms, diagnosis and prescribed cure. Scandinavian Journal of Psychology, 54(2), 152-159. https://doi.org/10.1111/sjop.12015 [ Links ]

Bäckström, M., & Björklund, F. (2016). Is the general factor of personality based on evaluative responding? Experimental manipulation of item-popularity in personality inventories. Personality and Individual Differences, 96, 31-35. https://doi.org/https://doi.org/10.1016/j.paid.2016.02.058 [ Links ]

Bäckström, M., Björklund, F., & Larsson, M. R. (2009). Five-factor inventories have a major general factor related to social desirability which can be reduced by framing items neutrally. Journal of Research in Personality, 43(3), 335-344. https://doi.org/10.1016/j.jrp.2008.12.013

Bäckström, M., Björklund, F., & Larsson, M. R. (2014). Criterion validity is maintained when items are evaluatively neutralized: Evidence from a full-scale five-factor model inventory. European Journal of Personality, 28(6), 620-633. https://doi.org/10.1002/per.1960

Borkenau, P., & Ostendorf, F. (1989). Descriptive consistency and social desirability in self- and peer reports. European Journal of Personality, 3(1), 31-45. https://doi.org/10.1002/per.2410030105

Bornstein, R. F. (2011). Toward a process-focused model of test score validity: Improving psychological assessment in science and practice. Psychological Assessment, 23(2), 532-544. https://doi.org/10.1037/a0022402

Decuyper, M., de Pauw, S., de Fruyt, F., de Bolle, M., & de Clercq, B. J. (2009). A meta-analysis of psychopathy-, antisocial PD- and FFM associations. European Journal of Personality, 23(7), 531-565. https://doi.org/10.1002/per.729

Edwards, A. L. (1953). The relationship between the judged desirability of a trait and the probability that the trait will be endorsed. Journal of Applied Psychology, 37(2), 90-93. https://doi.org/10.1037/h0058073

Embretson, S. E., & Reise, S. P. (2000). Item response theory for psychologists. Lawrence Erlbaum Associates.

Hauck Filho, N., Salvador-Silva, R., & Teixeira, M. A. (2014a). Análise de Teoria de Resposta ao Item de um instrumento breve de avaliação de comportamentos antissociais [Item response theory analysis of a brief instrument for assessing antisocial behaviors]. Psico (PUCRS), 45(1), 120-125.

Hauck Filho, N., Salvador-Silva, R., & Teixeira, M. A. (2014b). Análise de Teoria de Resposta ao Item de um instrumento breve de avaliação de comportamentos antissociais [Item response theory analysis of a brief instrument for assessing antisocial behaviors]. Psico (PUCRS), 45(1), 120-125.

Jamali, J., Ayatollahi, S. M. T., & Jafari, P. (2017). The effect of small sample size on measurement equivalence of psychometric questionnaires in MIMIC model: A simulation study. BioMed Research International, 2017, 7596101. https://doi.org/10.1155/2017/7596101

Moffitt, T. E. (1993). Adolescence-limited and life-course-persistent antisocial behavior: A developmental taxonomy. Psychological Review, 100(4), 674-701.

Muthén, L. K., & Muthén, B. O. (2017). Mplus user's guide (8th ed.). Muthén & Muthén.

Peabody, D. (1967). Trait inferences: Evaluative and descriptive aspects. Journal of Personality and Social Psychology, 7(4, Pt. 2), 1-18. https://doi.org/10.1037/h0025230

Pettersson, E., Mendle, J., Turkheimer, E., Horn, E. E., Ford, D. C., Simms, L. J., & Clark, L. A. (2014). Do maladaptive behaviors exist at one or both ends of personality traits? Psychological Assessment, 26(2), 433-446. https://doi.org/10.1037/a0035587

Pettersson, E., & Turkheimer, E. (2010a). Item selection, evaluation, and simple structure in personality data. Journal of Research in Personality, 44(4), 407-420. https://doi.org/10.1016/j.jrp.2010.03.002

Pettersson, E., & Turkheimer, E. (2010b). Item selection, evaluation, and simple structure in personality data. Journal of Research in Personality, 44(4), 407-420. https://doi.org/10.1016/j.jrp.2010.03.002

Pettersson, E., Turkheimer, E., Horn, E. E., & Menatti, A. R. (2012). The general factor of personality and evaluation. European Journal of Personality, 26(3), 292-302. https://doi.org/10.1002/per.839

Primi, R., Hauck-Filho, N., & Valentini, F. (2022). Self-report and observer ratings: Item types, measurement challenges, and techniques of scoring. In J. Burrus, S. H. Rikoon, & M. W. Brenneman (Eds.), Assessing competencies for social and emotional learning: Conceptualization, development, and applications (pp. 99-116). Routledge.

Saucier, G., Ostendorf, F., & Peabody, D. (2001). The non-evaluative circumplex of personality adjectives. Journal of Personality, 69(4). https://doi.org/10.1111/1467-6494.694155

Vaidyanathan, U., Hall, J. R., Patrick, C. J., & Bernat, E. M. (2011). Clarifying the role of defensive reactivity deficits in psychopathy and antisocial personality using startle reflex methodology. Journal of Abnormal Psychology, 120(1), 253-258. https://doi.org/10.1037/a0021224

Wirth, R. J., & Edwards, M. C. (2007). Item factor analysis: Current approaches and future directions. Psychological Methods, 12(1), 58-79. https://doi.org/10.1037/1082-989X.12.1.58

Ziegler, M. (2015). "F*** you, I won't do what you told me!" - Response biases as threats to psychological assessment. European Journal of Psychological Assessment, 31(3), 153-158. https://doi.org/10.1027/1015-5759/a000292

Ziegler, M., & Buehner, M. (2015). Modeling socially desirable responding and its effects. Educational and Psychological Measurement, 548-565.

Correspondence:

Nelson Hauck

Universidade São Francisco, Master's Program in Psychology (Mestrado em Psicologia).

R. Waldemar César da Silveira, 105 - Jardim Cura D'ars

Campinas - SP, 13045-510

E-mail: hauck.nf@gmail.com

Received in August 2022

Accepted in November 2022

Note about the authors

Nelson Hauck is a psychologist (UFSM) and holds a PhD in Psychology from the Federal University of Rio Grande do Sul (UFRGS). He is currently a professor in the Stricto Sensu Graduate Program in Psychology at Universidade São Francisco, Campinas campus.

Felipe Valentini is a psychologist (Unisinos) and holds a PhD in Psychology from the University of Brasília (UnB). He is currently a professor in the Stricto Sensu Graduate Program in Psychology at Universidade São Francisco, Campinas campus.