Universitas Psychologica

Print version ISSN 1657-9267

Univ. Psychol. vol. 9 no. 2 Bogotá Aug. 2009

Global Psychology: a bibliometric analysis of Web of Science Publications*

La psicología a nivel mundial: un análisis bibliométrico de las publicaciones en la Web of Science

José Navarrete-Cortés (I)**; Juan Antonio Fernández-López (II)***; Alfonso López-Baena (III)****; Raúl Quevedo-Blasco (IV)*****; Gualberto Buela-Casal (IV)

(I) University of Jaén, Spain

(II) System of Scientific Information of Andalusia, Seville, Spain

(III) Andalusian Agency of Evaluation, Córdoba, Spain

(IV) University of Granada, Spain

ABSTRACT

In this study, we carried out a classification by country based on an analysis of the scientific production of psychology journals. We analyzed a total of 108,741 documents published in the Web of Science. The indicators used were the Weighted Impact Factor, the Relative Impact Factor, the Citation Rate per article, and the number of articles published in the top five journals of the Journal Citation Report (JCR). The results indicate that Spain has the highest percentage of articles in the top five journals of the JCR, and that Colombia ranks second among Spanish-speaking Latin American countries in citations per article. Countries such as Hungary, Italy, and the USA had higher Impact Factor and Citation Rate values.

Keywords author: Descriptive study through document analysis, Scientific productivity, Bibliometric indicators, Country ranking.

Keywords plus: Scientific productivity, Bibliometric indicators, Psychology.

RESUMEN

En el siguiente trabajo se realizó una clasificación por países, atendiendo al análisis de la producción científica de las revistas de psicología. Se analizaron un total de 108.741 documentos, publicados en la Web of Science. Los indicadores empleados fueron el Factor de Impacto Ponderado, el Factor de Impacto Relativo, la Tasa de Citas por artículo y artículos publicados en las cinco primeras revistas de Journal Citation Report (JCR). Los resultados indican que España posee el mayor porcentaje de artículos en las primeras cinco revistas del JCR, y Colombia es el segundo país latinoamericano de lengua castellana que tiene más citas por artículo. Países como Hungría, Italia y EE.UU. poseían un mayor Factor de Impacto y Tasa de Citas.

Palabras clave autor: Estudio descriptivo mediante análisis de documentos, Productividad científica, Indicadores bibliométricos, Ranking de países.

Palabras clave descriptores: Productividad científica, Indicadores bibliométricos, Psicología.

There is considerable interest in the analysis of research publications in general, and in the field of psychology in particular. Proof of this can be found in the numerous studies carried out in this area (Bignami et al., 2000; Fava & Montonary, 1997; Fava, Ottolini & Sonino, 2001; Rahman & Fukui, 2000; Rivera-Garzón, 2008; Thompson, 1999). Nowadays it is necessary to analyze both the quality and the quantity of the scientific research carried out in numerous areas and to propose methodological guidance for university evaluation (Muñiz & Fonseca-Pedrero, 2008).

As a result, several rankings have been developed considering the quality and productivity of universities (Molinari & Molinari, 2008), both on an international level (see Buela-Casal, Gutiérrez, Bermúdez, & Vadillo, 2007; Buela-Casal et al., 2009a; Pagani et al., 2006) and in Spain (see Buela-Casal, Bermúdez, Sierra, Quevedo-Blasco, & Castro, 2009, 2010), and characteristic features have even been studied according to the area of knowledge (Fainholc, 2006). An example is the Academic Ranking of World Universities (Institute of Higher Education, Shanghai Jiao Tong University, 2008), in which universities are ranked on a global level according to their results (measured through articles in Web of Science journals). Rankings of Spanish universities have also been created according to the mobility of teachers and students in Doctorate Programs (Castro & Buela-Casal, 2008; Gil Roales-Nieto, 2009).

Within the field of higher education, both the quality (Buela-Casal & Castro, 2008a; González, Macías, Rodríguez & Aguilera, 2009) and the evaluation (Bengoetxea & Arteaga-Ortiz, 2009) of postgraduate degrees, as well as their productivity (Musi-Lechuga, Olivas-Ávila & Buela-Casal, 2009), have been the object of study, including even doctoral theses (Moyano, Delgado & Buela-Casal, 2006). It is especially worth noting that one of the most important indicators in evaluating the quality of postgraduate degrees is publication in Web of Science (WoS) journals (Bermúdez, Castro, Sierra & Buela-Casal, 2009; Buela-Casal & Castro, 2008b). Another aspect that is often evaluated, especially with regard to productivity, is university teaching staff (Hirsch, 2005; Musi-Lechuga, Olivas-Ávila, Portillo-Reyes & Villalobos-Galvis, 2005; Sierra, Buela-Casal, Bermúdez & Santos-Iglesias, 2009a). For this reason, several studies have been carried out on the criteria and process of staff evaluation (Sierra, Buela-Casal, Bermúdez & Santos-Iglesias, 2008; Viñolas, Aguado, Josa, Villegas & Fernández-Prada, 2009), the accreditation of university teaching staff (Alzate-Medina, 2008; Buela-Casal, 2007a, 2007b; Sierra, Buela-Casal, Bermúdez & Santos-Iglesias, 2009b), and the accreditation of permanent teachers and university professors (Buela-Casal & Sierra, 2007). Even training (Alegre & Villar, 2007) and teaching (García-Berro, Dapia, Amblàs, Bugeda & Roca, 2009), university departments (Roca, Villegas, Viñolas, García-Tornel & Aguado, 2008), the relation between the financing and the quality of universities (Osuna, 2009), information and communication technologies (Buela-Casal & Castro, 2009), and the implementation of ISO standards (Del Río, 2008) have been evaluated in order to ascertain university quality.

Within this process, scientific journals have become the principal means of communication in research, which is why they have become so important. Scientific productivity can be measured using several different bibliometric indicators (García-Martínez, Guerrero-Bote, Hassan-Montero & Moya-Anegón, 2008; Moya, 2004; Rueda-Clausen, Villa-Roel & Rueda-Clausen, 2005), but the main point of reference is the Impact Factor (IF) (Fava & Ottolini, 2000; Garfield, 2003), although the h-index is currently gaining relevance (see Csajbók, Berhidi, Vasas & Schubert, 2007; Egghe & Rao, 2008; Pires da Luz et al., 2008; Schubert & Glänzel, 2007; Tol, 2008). What was once a tool developed with a merely bibliographic objective has come to be used mainly as an evaluation instrument (Ruiz-Pérez, Delgado & Jiménez-Contreras, 2006).

In the analysis of journals, the Impact Factor (IF) is one of the most widespread indicators, although it has received criticism (Bordons, Fernández & Gómez, 2002; Fava & Ottolini, 2000; Seglen, 1997). Opinions regarding evaluation systems in research, and specifically those for articles and scientific journals, are increasingly frequent. While a priori the IF allows for the comparison of journals, it is also used to evaluate the quality of individual scientific publications (Opthog, 1997). Buela-Casal (2003) highlights one of the main disadvantages of using the IF for this purpose: an article is considered to be of high quality on the basis of the "impact" or "prestige" of the journal in which it was published, and therefore the conclusions drawn from this indicator are on most occasions incorrect. A series of intrinsic limitations also arise, such as the fact that it is based entirely on citations from a two-year period and that the "impact" or "prestige" of the source in which the citations appear is not taken into account (Buela-Casal, 2007b). Likewise, authors such as Bordons and Zulueta (1999) consider that it is an indicator that measures the visibility and diffusion of the articles published in these journals rather than their scientific quality. It is also important to note that there are studies that evaluate how the type of article published in a journal influences its impact factor (Buela-Casal et al., 2009b). Pelechano (2002) also noted that what was once a method for gauging the impact of scientific publications without actually having to read them is now the most widespread method of evaluating scientific contributions. In line with this criticism, Sternberg (2001) remarks that not everything that is published in the same journal is of the same quality.

Another important factor is that the IF of journals is generally related to their internationality. This is why several authors have studied the internationality index (Buela-Casal, Perakakis, Taylor & Checa, 2006; Buela-Casal, Zych, Sierra & Bermúdez, 2007; Villalobos-Galvis & Puertas-Campanario, 2007; Zych & Buela-Casal, 2007, 2009). Another variable that largely affects the IF is the age of the citations: while in certain fields recent articles are cited, in other fields the articles that are generally cited are ten years old (Gómez & Bordons, 1996). As a result, the IF is often an incorrect index of research quality and cannot substitute for the analysis of actual citations when comparing both people (Bignami et al., 2000) and countries (Fava & Montonary, 1997). Despite the high level of criticism that this indicator has received (Buela-Casal, 2003; Garfield, 2003), there is no other evaluation system that is so widely accepted by the scientific community and academic institutions.

In this article, global scientific productivity in the field of psychology is analyzed in terms of quality, using the Web of Science (WoS) database, for the period from 1999 to 2004.

Method

Units of analysis

The units of analysis were all countries with at least 60 articles published in the Web of Science (WoS) in any of the 11 psychology categories considered by the Journal Citation Report (JCR) (see Table 1) between 1999 and 2004.

Each country's articles were analyzed using the indicators shown in Table 2 (see Moed, Bruin & Van Leeuwen, 1995; Moya, 2004; Rueda-Clausen et al., 2005; Van Raan, 1999). A quantitative indicator, the number of publications of each country, was also used in order to obtain a criterion for forming the definitive sample and classifying the countries (see Table 2).

Materials

– Data from the Web of Science (WoS). The database used was the Social Sciences Citation Index (SSCI).

– Information relating to journals covered by the Institute for Scientific Information (ISI) during a period of six years, contained in the Journal Citation Report (JCR) database.

Design and procedure

This is a descriptive study based on document analysis, according to the classification proposed by Montero and León (2007). In developing and writing the study, the rules proposed by Ramos-Álvarez, Moreno-Fernández, Valdés-Conroy & Catena (2008) were followed, as well as the Berlin Principles on Ranking of Higher Education Institutions (International Ranking Expert Group, 2006).

The main objective of the study is to analyze productivity in psychology in terms of quality, measured in WoS journals (through the SSCI). In order to ensure that the main journals were covered, the relevant information was drawn from the JCR database for the period between 1999 and 2004. Using the aforementioned materials, documents were collected from the 470 journals included in the 11 psychology categories considered by the JCR (see Table 1) throughout the six-year period. Only documents classed as "Article" or "Review" were selected, and countries that published fewer than 60 articles in the period 1999-2004 were not included in the study.

An analysis of citations from journals that could be considered of scientific excellence was performed, using as the criterion their position during the six-year period within the top ten of all categories analyzed (Bordons et al., 2002). The first five journals of the JCR during any year of the study were registered and named "top 5". In order to facilitate the collection, analysis, and interpretation of the data obtained from the WoS, a database was designed in Microsoft Access 2003.
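The selection rule described in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' procedure (which relied on a Microsoft Access 2003 database): the record layout, the function name `select_documents`, and the idea that each record carries a single country are hypothetical, and the threshold is read as "at least 60" documents.

```python
from collections import Counter

MIN_ARTICLES = 60                    # threshold stated in the Method section
KEPT_TYPES = {"Article", "Review"}   # document types retained in the study


def select_documents(records):
    """Return the records that survive both selection rules.

    `records` is a hypothetical list of dicts with the keys
    'country' and 'doc_type' (one country per record is assumed).
    """
    # Rule 1: keep only documents classed as "Article" or "Review".
    typed = [r for r in records if r["doc_type"] in KEPT_TYPES]
    # Rule 2: drop countries with fewer than MIN_ARTICLES retained documents.
    per_country = Counter(r["country"] for r in typed)
    return [r for r in typed if per_country[r["country"]] >= MIN_ARTICLES]
```

With this reading, a country with 59 retained documents is excluded and one with exactly 60 is kept.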

Results

A total of 109,302 documents were found, of which only 108,741 were analyzed, as they were from countries that produced more than 60 articles during the period 1999-2004; countries such as Yugoslavia, Egypt, and Cuba were therefore excluded. In total, 103,552 were original articles and 5,186 were reviews. The sample was formed by a total of forty-five countries worldwide. Table 3 shows the total number of articles (Ndoc), the number of citations per article (Ncit/doc), and the number of articles in the first five journals of the JCR for each of the countries that make up the sample, after applying the analysis described in the Method section. Countries are ordered by number of articles, the first three being the United States, England, and Canada, respectively.

Among the Latin American countries, only five (see Table 4) appear among the countries with the highest number of citations per article; Argentina and Colombia are the Spanish-speaking countries with the highest citation rates.

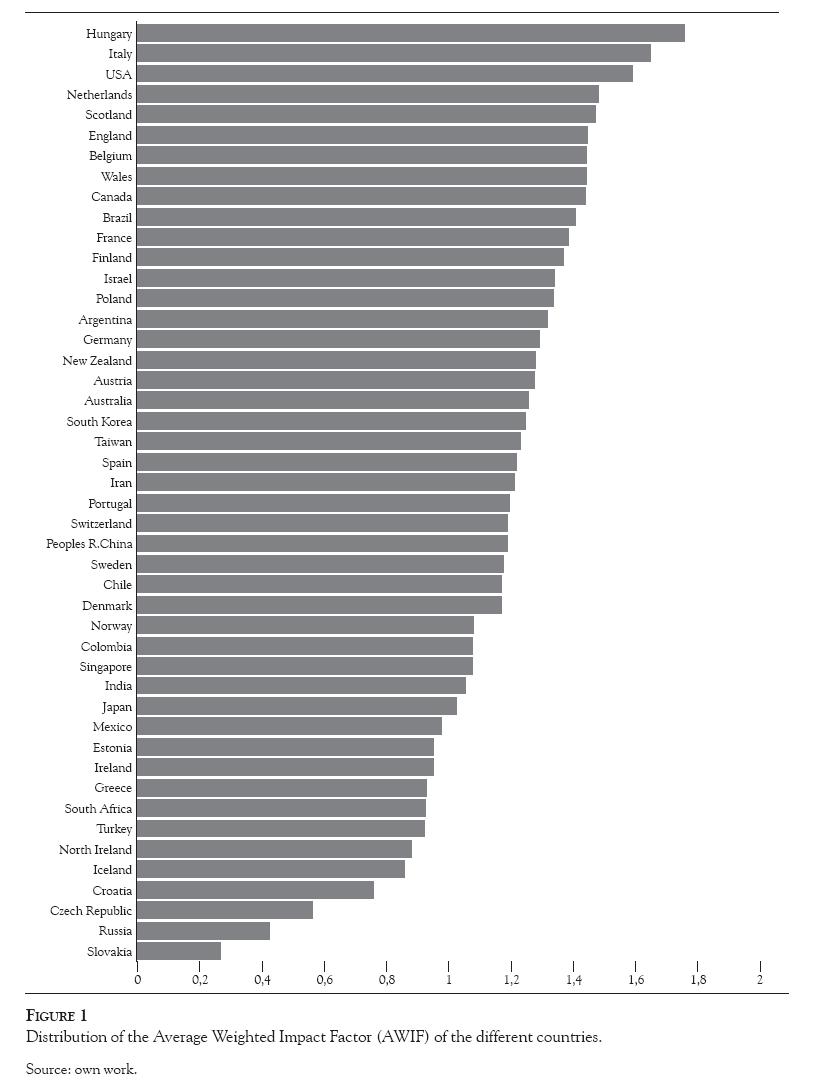

Figure 1 shows the Average Weighted Impact Factor (AWIF) of all countries making up the sample. The five countries with the highest AWIF are Hungary (1.75), Italy (1.64), the United States (1.59), Holland (1.48), and Scotland (1.47), while Slovakia (0.26), Russia (0.42), Czech Republic (0.56), Croatia (0.75), and Iceland (0.85) have the lowest values. Of the forty-five countries in the sample, only 35.55% have an AWIF that exceeds the average (1.29).

The countries with the highest Average Relative Impact Factor (ARIF) coincide with those that have the highest AWIF (see Figure 2): Hungary (1.36), Italy (1.27), the United States (1.23), Holland (1.15), and Scotland (1.14).

Figure 3 represents a joint analysis of the Average Weighted Impact Factor (AWIF) and the Average Citation Rate per article (Ncit/Ndoc), where Hungary, Italy, the United States, Holland, and Scotland are again the countries with the highest figures for both indicators. In the last positions are Czech Republic, Russia, and Slovakia.

The average number of citations per article in the sample is 4.33 (see Table 3). The country with the highest average citation rate is Hungary (5.94 citations per article), followed by England (5.02), Wales (4.97), Canada (4.82), and Holland (4.55), while Slovakia (0.64), Russia (0.96), Czech Republic (1.39), Greece (1.6), and Northern Ireland (1.85) have the lowest average citation rates. Of the 45 countries, only 15.55% have a higher than average Citation Rate per article.
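As a small worked illustration of the arithmetic behind these figures, the sketch below computes a citation rate (Ncit/Ndoc) and the share of countries above a threshold. The function names are hypothetical, and the text does not state whether the sample average of 4.33 is a mean of country rates or total citations divided by total documents, so the threshold is simply passed in as a parameter.

```python
def citation_rate(n_cit, n_doc):
    """Citations per article: Ncit / Ndoc."""
    return n_cit / n_doc


def share_above(values, threshold):
    """Percentage of values strictly greater than the threshold."""
    above = sum(1 for v in values if v > threshold)
    return 100 * above / len(values)


# 7 of 45 countries is about 15.56%, consistent with the reported 15.55%
# (the article appears to truncate rather than round).
print(round(100 * 7 / 45, 2))
# 16 of 45 countries is about 35.56%, consistent with the 35.55% reported for the AWIF.
print(round(100 * 16 / 45, 2))
```

Read this way, the reported percentages correspond to 7 countries above the average Citation Rate and 16 countries above the average AWIF.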

Table 5 shows the most important countries in terms of the number of articles in the first five journals of scientific excellence (Ntop5) of the JCR. The highest percentage of articles published in the first five journals of the JCR with regard to total production (%Ntop5) during the six-year period is held by Spain (66.32%), followed by Hungary (56.63%), Wales (55.36%), and Holland (55.14%). The United States (65.96%), England (9.9%), and Canada (7.88%) have the highest percentage of articles in the first five journals of the JCR in relation to the total production of the sample (%Ntop5-sample) (see Table 5).

Therefore, the countries with the highest production in terms of number of articles (the United States, England, Canada, Germany, and Australia) are not the countries that have the highest percentage of publications in the first five journals of the JCR. England, for example, is nevertheless the second country both in number of citations and in number of articles published in journals of scientific excellence, and Canada is the fourth country in number of citations and the third in number of articles published in journals of scientific excellence. Regarding the percentage of publications in the first five journals of scientific excellence compared to the total production of the sample, the main countries coincide with those that have the highest production.

When production is analyzed by continent (Navarrete-Cortés, Quevedo-Blasco, Chaichio-Moreno, Ríos & Buela-Casal, 2009), it can be noted that Europe and North America account for 88% of world production. Specifically, Europe accounts for 55% of the total and North America for 33%. The continent with the fewest documents is Africa, with 0.38%.

Finally, in Figure 4 a quantitative aspect (Navarrete-Cortés et al., 2009) is combined with a qualitative one. Specifically, the ARIF and the number of citations were analyzed, measuring the expected and the observed quality respectively, in relation to the Relative Effort Index (REI), which is the proportion of psychology articles published in comparison to a country's total scientific production. A country reaches the highest level in psychology research when it has a high REI, a high RIF value, and a higher than average number of citations. The United States, Canada, Holland, New Zealand, and Australia are clearly among the leading countries in the psychology field.
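The two percentages in this paragraph differ only in their denominator, which the following sketch makes explicit. It is an interpretation rather than the authors' stated formula: %Ntop5 is taken as a country's top-5 articles over its own production, and %Ntop5-sample as the same numerator over the total number of top-5 articles in the whole sample. The function names and the example figures in the usage note are hypothetical.

```python
def percent(part, whole):
    """Return part / whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


def top5_shares(n_top5, n_doc, n_top5_sample):
    """Return (%Ntop5, %Ntop5-sample) for one country.

    n_top5        -- the country's articles in top-5 journals
    n_doc         -- the country's total articles (Ndoc)
    n_top5_sample -- top-5 articles across all countries (assumed denominator)
    """
    return percent(n_top5, n_doc), percent(n_top5, n_top5_sample)
```

For example, a hypothetical country with 50 top-5 articles out of 200, in a sample containing 1,000 top-5 articles, would score 25% on the first measure and 5% on the second. This is why a small country can lead on %Ntop5 while large producers dominate %Ntop5-sample.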
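The combined criterion can be illustrated with a short sketch. The REI follows the definition given in the text (psychology articles as a proportion of a country's total scientific production); what counts as a "high" REI or ARIF is not specified, so the sketch assumes, purely for illustration, that "high" means above the sample mean. The `Country` class, its field names, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Country:
    name: str
    psych_docs: int     # psychology articles
    total_docs: int     # total scientific production
    arif: float         # Average Relative Impact Factor
    cit_per_doc: float  # citations per article


def rei(c):
    """Relative Effort Index: psychology share of total production."""
    return c.psych_docs / c.total_docs


def leading_countries(countries):
    """Names of countries above the sample mean on all three indicators.

    Assumption: 'high' is read as 'above the mean of the sample'.
    """
    n = len(countries)
    mean_rei = sum(rei(c) for c in countries) / n
    mean_arif = sum(c.arif for c in countries) / n
    mean_cit = sum(c.cit_per_doc for c in countries) / n
    return [
        c.name
        for c in countries
        if rei(c) > mean_rei and c.arif > mean_arif and c.cit_per_doc > mean_cit
    ]
```

A country with strong citation figures but a low REI would not be selected under this reading, which matches the idea that leadership requires both effort and quality.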

Discussion

Despite the limitations that can arise from the use of the Impact Factor (IF) to analyze scientific production (Garfield, 2003), this study can help to identify the main geographical areas within the field of psychology in relation to global scientific production. The analysis showed that not all countries with a high production of articles (Ndoc) have a high Relative Impact Factor (RIF), a high Weighted Impact Factor (WIF), or a high number of citations.

Working with the Web of Science (WoS) can raise the concern that a journal from a category other than those analyzed has been left out, but since the database assigns journals to a specific category depending on the origin of the citations, adequate coverage is assured. In addition, this study rests on a large set of units of analysis: more than 100,000 documents from forty-five countries across all continents.

The countries that produced the most articles were not always those that produced work of the highest quality. Although the AWIF was generally higher in countries with a higher than average Citation Rate (4.33 citations per article), such as England and Canada, there were also countries with a high AWIF but a low citation rate, such as Belgium, Brazil, and Poland. This could be because these countries have a greater interest in obtaining visibility through publication in high impact journals.

On the other hand, the unexpectedly high number of citations per article obtained by countries that are initially less productive could be the result of their high level of collaboration with high-ranking countries, as in the case of Hungary with the United States and England.

The best indicator of the quality of a country's production may be the percentage of its production published in the first five journals of the Journal Citation Report (JCR) (Table 3). According to this criterion, Spain is the most important country, followed by Hungary, Wales, and Holland. Likewise, it is important to note that Europe (55%) is the continent with the highest contribution to the total production of the sample, followed by North America (33%). It is also noteworthy that the African continent contributes only 0.38% (Navarrete-Cortés et al., 2009).

In conclusion, a combined analysis of the quantitative indicator Relative Effort Index (REI) (Navarrete-Cortés et al., 2009) and the quality indicators ARIF and number of citations was carried out across the different countries (Figure 4), showing that the United States, alongside New Zealand, Canada, Holland, and Australia, are the countries with the highest scientific production in the psychology field in relation to general production, while also achieving high quality.

The United States, England, Canada, and Germany were the most productive countries in terms of number of articles. The articles from the United States and Canada, alongside articles from countries such as England, Hungary, Italy, Holland, and Wales, were those of the highest quality with regard to the AWIF and ARIF. Hungary is the country with the highest Citation Rate in the whole sample.

For this reason, different countries are currently assessing training in the field of psychology (Robledo-Gómez, 2008a) and even the very meaning of the discipline (Clavijo, 2007; Robledo-Gómez, 2008b).

References

Alegre, O. M. & Villar, L. M. (2007). Evaluación de la formación en línea del profesorado de cinco universidades españolas. Revista de Universidad y Sociedad del Conocimiento (RUSC), 4, 1-13.

Alzate-Medina, G. M. (2008). Efectos de la acreditación en el mejoramiento de la calidad de los programas de psicología de Colombia. Universitas Psychologica, 7, 425-439.

Bengoetxea, E. & Arteaga-Ortiz, J. (2009). La evaluación de postgrados internacionales en la Unión Europea. Ejemplos de buenas prácticas de programas europeos. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 60-68.

Bermúdez, M. P., Castro, A., Sierra, J. C. & Buela-Casal, G. (2009). Análisis descriptivo transnacional de los estudios de doctorado en el EEES. Revista Psicodidáctica, 14, 193-210.

Bignami, G., De Girolamo, G., Fava, G. A., Morosini, P. L., Pasquín, P., Pastore, V. & Tansella, M. (2000). The impact on the international literature of the scientific production of Italian researchers in the disciplines "psychiatry" and "psychology": A bibliometric evaluation. Epidemiologia e Psichiatria Sociale, 9, 11-25.

Bordons, M., Fernández, M. T. & Gómez, I. (2002). Advantages and limitations in the use of impact factor measures for the assessment of research performance. Scientometrics, 53, 195-206.

Bordons, M. & Zulueta, M. A. (1999). Evaluación de la actividad científica a través de indicadores bibliométricos. Revista Española de Cardiología, 52, 790-800.

Buela-Casal, G. (2003). Evaluación de la calidad de los artículos y de las revistas científicas: propuesta del factor de impacto ponderado y de un índice de calidad. Psicothema, 15, 23-35.

Buela-Casal, G. (2007a). Consideraciones metodológicas sobre el procedimiento de acreditación y del concurso de acceso a cuerpos de funcionarios docentes universitarios. Revista Electrónica de Metodología Aplicada, 12, 1-14.

Buela-Casal, G. (2007b). Reflexiones sobre el sistema de acreditación del profesorado funcionario de Universidad en España. Psicothema, 19, 473-482.

Buela-Casal, G., Bermúdez, M. P., Sierra, J. C., Quevedo-Blasco, R. & Castro, A. (2009). Ranking de 2008 en productividad en investigación de las universidades públicas españolas. Psicothema, 21, 309-317.

Buela-Casal, G., Bermúdez, M. P., Sierra, J. C., Quevedo-Blasco, R. & Castro, A. (2010). Ranking de 2009 en investigación de las universidades públicas españolas. Psicothema, 22, 171-179.

Buela-Casal, G. & Castro, A. (2008a). Criterios y estándares para la obtención de la Mención de Calidad en Programas de Doctorado: evolución a través de las convocatorias. International Journal of Psychology and Psychological Therapy, 8, 127-136.

Buela-Casal, G. & Castro, A. (2008b). Análisis de la evolución de los Programas de Doctorado con Mención de Calidad en las universidades españolas y propuestas de mejora. Revista de Investigación en Educación, 5, 49-60.

Buela-Casal, G. & Castro, A. (2009). Las tecnologías de la información y la comunicación y la evaluación de la calidad en la educación superior. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 3-8.

Buela-Casal, G., Gutiérrez, O., Bermúdez, M. P. & Vadillo, O. (2007). Comparative study of international academic rankings of universities. Scientometrics, 71, 349-365.

Buela-Casal, G., Medina, A., Viedma del Jesús, M. I., Lozano, S., Torres, G. & Zych, I. (2009b). Analysis of the influence of the two types of the journal articles; theoretical and empirical on the impact factor of a journal. Scientometrics, 80, 267-284.

Buela-Casal, G., Perakakis, P., Taylor, M. & Checa, P. (2006). Measuring Internationality: Reflections and perspectives on academic journals. Scientometrics, 67, 45-65.

Buela-Casal, G. & Sierra, J. C. (2007). Criterios, indicadores y estándares para la acreditación de profesores titulares y catedráticos de Universidad. Psicothema, 19, 537-551.

Buela-Casal, G., Vadillo, O., Pagani, R., Bermúdez, M. P., Sierra, J. C., Zych, I. & Castro, A. (2009a). Comparación de los indicadores de la calidad de las universidades. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 9-21.

Buela-Casal, G., Zych, I., Sierra, J. C. & Bermúdez, M. P. (2007). The Internationality Index of the Spanish Psychology Journals. International Journal of Clinical and Health Psychology, 7, 899-910.

Castro, A. & Buela-Casal, G. (2008). La movilidad de profesores y estudiantes en Programas de Doctorado: ranking de las universidades españolas. Revista de Investigación en Educación, 5, 61-74.

Clavijo, A. (2007). Lo psicológico como un evento. Universitas Psychologica, 6, 699-711.

Csajbók, E., Berhidi, A., Vasas, L. & Schubert, A. (2007). Hirsch-index for countries based on Essential Science Indicators data. Scientometrics, 73, 91-117.

Del Río, L. (2008). Cómo implantar y certificar un sistema de gestión de la calidad en la Educación Superior. Revista de Investigación en Educación, 5, 5-11.

Egghe, L. & Rao, I. K. R. (2008). Study of different h-indices for groups of authors. Journal of the American Society for Information Science and Technology, 59, 1276-1281.

Fainholc, B. (2006). Rasgos de las universidades y de las organizaciones de educación superior para una sociedad del conocimiento, según la gestión del conocimiento. Revista de Universidad y Sociedad del Conocimiento (RUSC), 3, 1-10.

Fava, G. A. & Montonary, A. (1997). National trends of research in psychology and psychiatry (1981-1995). Psychotherapy and Psychosomatics, 66, 169-174.

Fava, G. A. & Ottolini, F. (2000). Impact factor versus actual citation. Psychotherapy and Psychosomatics, 69, 285-286.

Fava, G. A., Ottolini, F. & Sonino, N. (2001). Which are the leading countries in clinical medicine research? A citation Analysis (1981-1998). Psychotherapy and Psychosomatics, 70, 283-287.

García-Berro, E., Dapia, F., Amblás, G., Bugeda, G. & Roca, S. (2009). Estrategias e indicadores para la evaluación de la docencia en el marco del EEES. Revista de Investigación en Educación, 6, 142-152.

García-Martínez, A. T., Guerrero-Bote, V., Hassan-Montero, Y. & Moya-Anegón, F. (2008). La Psicología en el dominio científico español a través del análisis de cocitación de revistas. Universitas Psychologica, 8, 13-26.

Garfield, E. (2003). The meaning of the impact factor. International Journal of Clinical and Health Psychology, 3, 363-369.

Gil Roales-Nieto, J. (2009). Análisis de los estudios de doctorado en psicología con mención de calidad en universidades españolas. Revista de Investigación en Educación, 6, 160-172.

Gómez, I. & Bordons, M. (1996). Limitaciones en el uso de los indicadores bibliométricos para la evaluación científica. Política Científica, 46, 21-26.

González, F., Macías, E., Rodríguez, M. & Aguilera, J. L. (2009). Prospectiva y evaluación del ejercicio docente de los profesores universitarios como exponente de buena calidad. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 38-48.

Hirsch, J. E. (2005). An index to quantify an individual's scientific research output. Proceedings of the National Academy of Sciences of the U.S.A., 102, 16569-16572.

Institute of Higher Education, Shanghai Jiao Tong University. (2008). Academic Ranking of World Universities. Available at http://www.arwu.org

International Ranking Expert Group. (2006). Berlin Principles on Ranking of Higher Education Institutions. Available at http://www.che.de/downloads/Berlin_Principles_IREG_534.pdf

Moed, H. F., Bruin, R. E. & Van Leeuwen, T. N. (1995). New bibliometric tools for the assessment of national research performance: Database description, overview of indicators and first application. Scientometrics, 33, 381-422.

Molinari, J. F. & Molinari, A. (2008). A new methodology for ranking scientific institutions. Scientometrics, 75, 163-174.

Montero, I. & León, O. G. (2007). A guide for naming research studies in Psychology. International Journal of Clinical and Health Psychology, 7, 847-862.

Moya, F. (Dir.). (2004). Indicadores bibliométricos de la actividad científica española. Madrid: FECYT.

Moyano, M., Delgado, C. J. & Buela-Casal, G. (2006). Análisis de la productividad científica de la Psiquiatría española a través de las tesis doctorales en la base de datos TESEO (1993-2002). International Journal of Psychology and Psychological Therapy, 6, 111-120.

Muñiz, J. & Fonseca-Pedrero, E. (2008). Construcción de instrumentos de medida para la evaluación universitaria. Revista de Investigación en Educación, 5, 13-25.

Musi-Lechuga, B., Olivas-Ávila, J. & Buela-Casal, G. (2009). Producción científica de los programas de doctorado en Psicología Clínica y de la Salud de España. International Journal of Clinical and Health Psychology, 9, 161-173.

Musi-Lechuga, B., Olivas-Ávila, J., Portillo-Reyes, V. & Villalobos-Galvis, F. (2005). Producción de los profesores funcionarios de Psicología en España en artículos de revistas con factor de impacto de la Web of Science. Psicothema, 17, 539-548.

Navarrete-Cortés, J., Quevedo-Blasco, R., Chaichio-Moreno, J. A., Ríos, C. & Buela-Casal, G. (2009). Análisis cuantitativo por países de la productividad en psicología de las revistas en la Web of Science. Revista Mexicana de Psicología, 26, 131-143.

Opthog, T. (1997). Sense and nonsense about the impact factor. Cardiovascular Research, 33, 1-7.

Osuna, E. (2009). Calidad y financiación de la universidad. Revista de Investigación en Educación, 6, 133-141.

Pagani, R., Vadillo, O., Buela-Casal, G., Sierra, J. C., Bermúdez, M. P., Gutiérrez-Martínez, O. et al. (2006). Estudio internacional sobre criterios e indicadores de calidad de las universidades. Madrid: ACAP.

Pelechano, V. (2002). ¿Valoración de la actividad científica en Psicología? ¿Pseudoproblema, sociologismo o idealismo? Análisis y Modificación de Conducta, 28, 323-362.

Pires da Luz, M., Marques-Portella, C., Mendlowicz, M., Gleiser, S., Freire Coutinho, E. S. & Figueira, I. (2008). Institutional h-index: The performance of a new metric in the evaluation of Brazilian Psychiatric Post-graduation Programs. Scientometrics, 77, 361-368.

Rahman, M. & Fukui, T. (2000). Biomedical research productivity in Asian countries. Journal of Epidemiology, 10, 290-291.

Ramos-Álvarez, M., Moreno-Fernández, M. M., Valdés-Conroy, B. & Catena, A. (2008). Criteria of the peer review process for publication of experimental and quasi-experimental research in Psychology: A guide for creating research papers. International Journal of Clinical and Health Psychology, 8, 751-764.

Rivera-Garzón, D. M. (2008). Caracterización de la comunidad científica de Psicología que publica en la revista Universitas Psychologica (2002-2008). Universitas Psychologica, 7, 917-932.

Roca, S., Villegas, N., Viñolas, B., García-Tornel, A. J. & Aguado, A. (2008). Evaluación y jerarquización de departamentos universitarios mediante análisis de valor. Revista de Investigación en Educación, 5, 27-40.

Robledo-Gómez, A. M. (2008a). La formación de psicólogas y psicólogos en Colombia. Universitas Psychologica, 7, 9-18.

Robledo-Gómez, A. M. (2008b). Pensar la psicología hoy. Universitas Psychologica, 7, 911-916.

Rueda-Clausen, C. F., Villa-Roel, C. & Rueda-Clausen, C. E. (2005). Indicadores bibliométricos: origen, aplicación, contradicción y nuevas propuestas. Revista MedUNAB, 8, 29-36.

Ruiz-Pérez, R., Delgado, E. & Jiménez-Contreras, E. (2006). Criterios del Institute for Scientific Information para la selección de revistas científicas. Su aplicación a las revistas españolas: metodología e indicadores. International Journal of Clinical and Health Psychology, 6, 401-424.

Schubert, A. & Glänzel, W. (2007). A systematic analysis of Hirsch-type indices for journals. Journal of Informetrics, 1, 179-184.

Seglen, P. O. (1997). Citation and journal factors: Questionable indicators of research quality. Allergy, 52, 1050-1056.

Sierra, J. C., Buela-Casal, G., Bermúdez, M. P. & Santos-Iglesias, P. (2008). Análisis transnacional del sistema de evaluación y selección del profesorado universitario. Interciencia, 33, 251-257.

Sierra, J. C., Buela-Casal, G., Bermúdez, M. P. & Santos-Iglesias, P. (2009a). Diferencias por sexo en los criterios y estándares de productividad científica y docente en profesores funcionarios en España. Psicothema, 21, 124-132.

Sierra, J. C., Buela-Casal, G., Bermúdez, M. P. & Santos-Iglesias, P. (2009b). Importancia de los criterios e indicadores de evaluación y acreditación del profesorado funcionario universitario en los distintos campos de conocimiento de la UNESCO. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 49-59.

Sternberg, R. J. (2001). Where was it published? Observer, 14, 3.

Thompson, D. F. (1999). Geography of U.S. biomedical publications, 1990 to 1997. The New England Journal of Medicine, 340, 817-818.

Tol, R. S. J. (2008). A rational, successive g-index applied to economics departments in Ireland. Journal of Informetrics, 2, 149-155.

Van Raan, A. F. J. (1999). Advanced bibliometric methods for the evaluation of Universities. Scientometrics, 45, 417-423.

Villalobos-Galvis, F. & Puertas-Campanario, R. (2007). Impacto e internacionalidad de tres revistas iberoamericanas en revistas de psicología de España. Revista Latinoamericana de Psicología, 39, 593-608.

Viñolas, B., Aguado, A., Josa, A., Villegas, N. & Fernández-Prada, M. A. (2009). Aplicación del análisis de valor para una evaluación integral y objetiva del profesorado universitario. Revista de Universidad y Sociedad del Conocimiento (RUSC), 6, 22-37.

Zych, I. & Buela-Casal, G. (2007). Análisis comparativo de los valores en el Índice de Internacionalidad de las revistas iberoamericanas de psicología incluidas en la Web of Science. Revista Mexicana de Psicología, 24, 7-14.

Zych, I. & Buela-Casal, G. (2009). The Internationality Index: Application to Revista Latinoamericana de Psicología. Revista Latinoamericana de Psicología, 41, 401-412.

Received: August 2009

Revised: October 2009

Accepted: November 1, 2009

* Research article in bibliometrics.

** Access to Document Service, University Library, University of Jaén (Spain), 21071. E-mail: jcortes@ujaen.es

*** Junta of Andalusia, Seville (Spain), 41071. E-mail: jafl@us.es

**** Junta of Andalusia, Córdoba (Spain), 14006. E-mail: ajlopez@ucua.es

***** Faculty of Psychology, University of Granada, Granada (Spain), 18071. E-mails: rquevedo@ugr.es; gbuela@ugr.es